What's New in Surgical AI: 3/19/2023 Edition

Vol. 17: Be the Match

Welcome back! Here at Ctrl-Alt-Operate, we sift through the world of A.I. to retrieve high-impact news from this week that will change your clinic and operating room tomorrow.

March Madness started this week, and we don't mean the college basketball tournament. No, no: this week we saw nearly every A.I. behemoth, including Microsoft (MSFT), Google (GOOG), OpenAI, Anthropic and more, release new models, products or applications. This matters for the MD of today, and it matters even more for the MD of tomorrow.

This describes our week quite accurately:

Lucky for you, we’ll do the heavy lifting: keeping track of the who’s who and the what’s what, and bringing it back to the OR, clinic, hospital, or wherever else you find yourself delivering care.

Table of Contents

📰 News of the Week

🤿 Deep Dive: Learning with AI

🐦🏆 Tweets of the Week

🔥📰 News of the Week

If you think A.I. is real, you need to read this edition. There aren’t many weeks where things “feel different.” This is one of those weeks, although such weeks are happening more and more often. Exciting times!

Apple (AAPL), which has been characteristically slow and methodical in releasing new technology, may be coming out of hibernation. Reportedly, it will launch a new Siri and a new AI headset in June. Augmenting your reality in real time is depicted here for interior design, but what about preoperative planning?

What about helping a patient visualize outcomes, either for motivation or to set realistic expectations? If built with clinical principles first, these can be powerful tools in the clinician’s and surgeon’s armamentarium.

Okay, augmented reality was the appetizer. On Tuesday, OpenAI released the much-anticipated GPT-4, the newest upgrade to its line of large language models (of ChatGPT fame). GPT-4 is fucking awesome.

We hate making you do work, but you owe it to yourself to watch Greg Brockman (President and Co-Founder of OpenAI) demo GPT-4. Among the crazy sh*t he did:

Copied and pasted a section of the tax code and had it do his taxes.

Copied and pasted an entire website’s worth of code documentation and had GPT-4 build its own Discord chatbot.

And then the craziest part:

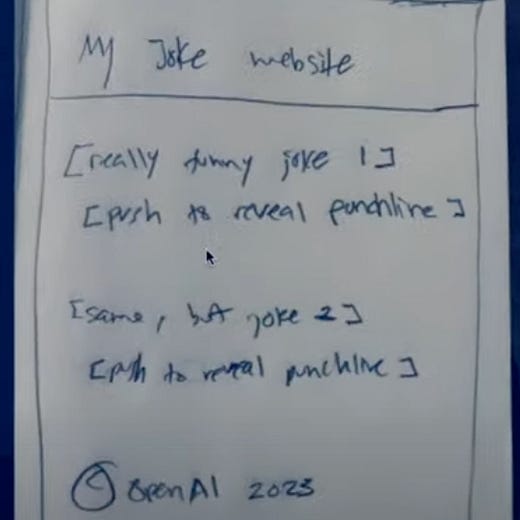

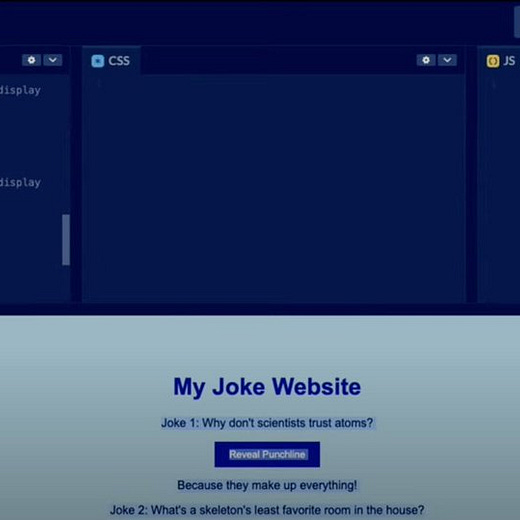

He drew a website on a physical piece of paper, took a picture of it, sent it to GPT-4, and it created the website for him 🤯

This will have implications for everyone. But we’re just getting started. Let’s round up the news and then get to the implications at the end.

Not to bury the lede, but Google also showed that its newest clinical large language model achieved >85% on the USMLE… uhh… yeah, that’s really good, and probably puts the AI somewhere in the 260s...

Separately, Google announced that its entire Workspace would be powered by AI. We’ve all been there: long day in the OR, and now you’re 12 emails behind on a thread. Let Gmail’s A.I. summarize it for you, no problem. That’s just one of many new features.

OK, let’s keep going:

Not to be outdone by Google, Microsoft announced that Office 365 would be powered by an AI copilot.

Academics, surgeons, et al.,

Clinical duties aside (where A.I. may need to clear a significantly higher bar before it is implemented, or where institutional barriers must first be lifted), these tools can help you become a better administrator, teacher, academician, leader and innovator.

Here’s one example: we all have messy data sitting on our computers, waiting to be analyzed. We can now chat with a bot that can write a script to clean that data for us. Never done it before? Ask how to do it. Don’t know which windows to open, which buttons to click, or which applications you need? All you have to do is ask.
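To make this concrete, here is a minimal sketch of the kind of cleaning script such a bot might write, using only Python’s standard library. The column names (`patient_id`, `age`, `sex`), the placeholder strings treated as missing, and the normalization rules are hypothetical assumptions for illustration; your data will need its own rules, and any generated script should be checked before you trust its output.

```python
import csv
import io

# Hypothetical placeholder strings that should count as "missing".
MISSING = {"", "na", "n/a", "null", "none", "-"}

# Hypothetical mapping used to standardize free-text entries in a "sex" column.
SEX_CODES = {"m": "M", "male": "M", "f": "F", "female": "F"}


def clean_rows(raw_csv: str) -> list[dict]:
    """Trim whitespace, mark missing values as None, standardize the
    hypothetical 'sex' column and drop exact duplicate rows."""
    reader = csv.DictReader(io.StringIO(raw_csv))
    seen = set()
    cleaned = []
    for raw in reader:
        row = {}
        for key, value in raw.items():
            if key is None:  # extra cells beyond the header row are ignored
                continue
            value = (value or "").strip()
            row[key.strip()] = None if value.lower() in MISSING else value
        if row.get("sex"):
            row["sex"] = SEX_CODES.get(row["sex"].lower(), row["sex"])
        # Dicts are unhashable, so fingerprint each row as a sorted tuple.
        fingerprint = tuple(sorted(row.items(), key=lambda item: item[0]))
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        cleaned.append(row)
    return cleaned
```

For example, a file whose header reads `patient_id, age ,sex`, with rows containing `Male`, `f`, `N/A` and one duplicated line, comes back as two tidy rows: `sex` coded as `M`/`F`, and the `N/A` age stored as `None`.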

This newsletter is already going to be long, so we’ll leave it at this for now. Don’t worry, we’ll go into how to implement these tools in detail in the future. For now, a poll:

🤿 Deep Dive: Learning with AI

For those of you living outside the bizarre job non-market of medicine, this week was “Match Week” for final-year medical students in the US. After months of interviewing at dozens of hospitals for the privilege of being absurdly underpaid for 3-7 years, these soon-to-be MDs face the Hogwarts Sorting Hat of the National Resident Matching Program algorithm and are promptly assigned to fictional houses (called residencies) that will forever change their adult lives. This is a high-stakes match: putting a Slytherin into House Hufflepuff could be disastrous. Meanwhile, third-year medical students are gearing up for influential fourth-year rotations, and second-year students are taking Step 1 of the USMLE licensing exam. Spring is a stressful time for all medical students, so if you see one of them out there, give them a hug. And, before you ask, Dhiraj did great!

Here at Ctrl-Alt-Operate, we are all learners, whether in formal degree and training programs or in self-directed and lifelong study. This week I’ll ask you to invest 10 minutes of your time thinking about how we can use AI to improve knowledge and technical procedural learning in medicine and surgery.

First, I should outline some contours of the clinical learning environment that affect all physicians, from medical students to practicing physicians.

Students of medicine (at all levels) are adult learners. Adults learn differently than children, with unique needs and characteristics. Some of these principles include self-directed learning, the need for relevance and applicability of new knowledge to the learner's life or work, and the use of experience and prior knowledge as a foundation for new learning. Adult learning theory also recognizes the importance of a learning environment that fosters collaboration, feedback, and reflection.

Across domains of human performance, expertise and mastery are best developed through deliberate practice. The definition of deliberate practice, developed by K. Anders Ericsson, is the oft-forgotten prepositional phrase of the “10,000 hours rule” popularized by Gladwell and others: expert performance results from a specific style of purposeful and focused practice. Deliberate practice involves identifying and working on specific weaknesses, receiving feedback, and constantly pushing oneself beyond current skill levels. Through deliberate practice, individuals can continuously improve their performance and achieve mastery in a particular domain. Although the “10,000 hours rule” is well known, most forget that “10,000 hours” is a noun adjunct, not the thing itself. Deliberate practice is the thing we care about. Clinical medicine is lacking here…

Clinical medical practice does not provide deliberate practice. In clinical care, the primary focus is on treating patients. Learning may occur as a byproduct of repeated high-stakes performances, but playing Carnegie Hall every day is not the same thing as rehearsing. Deliberate practice requires timely, tailored feedback, but busy professionals often lack the time, training or incentives to provide guidance, coaching and skill-building. Deliberate practice requires structured sub-task repetitions, like playing scales on a piano, but the variety and unpredictability of medical situations rarely permit “pausing” a patient’s care to repeat a sub-task until it is mastered. Moreover, overt mistakes are often subsumed into a culture of silence and shame, resulting in missed opportunities for improvement.

Might we be able to harness AI to deliver deliberate practice to adult learners in clinical medicine? Consider two key facts.

AIs are being baked into major software platforms. Our prior section showed how both Google Workspace and Microsoft Office are receiving deployments of their respective companies’ LLMs.

Large language models (particularly the multimodal vision-language models we covered last week) are likely to achieve expert human performance on tests of medical knowledge. Although Google’s press releases leave a great deal to be desired, the trend is undeniable: models are steadily approaching human expertise on the limited publicly available test data (allegedly segregated from the training dataset).

But our question this week isn’t “how good are LLMs?” but “how can we work with LLMs to get better?”, so let’s keep diving. What should adult learners in search of deliberate practice do with a medical knowledge expert, text generator and parser, and chat interface in their pocket?

Start by understanding the observed behavior of March-2023-caliber foundation models. As always, Cowen and Tabarrok have been leading public intellectuals in this corner of the internet, and their tutorial-spotlight on how novice LLM users can use AI in learning economics is influential.

Having understood the general observed behaviors of LLMs, here is what doctors need to know to learn clinical medicine with AI assistance. Some of these strategies can be performed using ChatGPT today; others need building (attention, computer science teammates!)

Accelerate conventional knowledge management and spaced repetition strategies using chat interfaces. Use AI to generate questions for an article you are reading, since answering questions improves knowledge retention. Want a mnemonic for something you need to remember? Ask ChatGPT for mnemonics and cloze deletions that you can copy-paste, or reformat into .csv for direct import into your flashcard app.

Multiple mnemonics can be generated, but the end user has to decide whether the underlying medical facts are true, false, useful or useless.
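As a rough sketch of the copy-paste-to-.csv step, the snippet below wraps chosen phrases in cloze markup and writes the cards out as CSV text. It assumes Anki-style cloze syntax (`{{c1::...}}`) and a simple two-column layout (card text, extra notes); the column layout and the function names are assumptions, so check your flashcard app’s import settings. Note that it does nothing to verify the fact itself, which remains the learner’s job.

```python
import csv
import io


def make_cloze(sentence: str, answers: list[str]) -> str:
    """Wrap each answer phrase (first occurrence) in Anki-style cloze markup,
    numbering deletions c1, c2, ... in the order given."""
    for n, answer in enumerate(answers, start=1):
        if answer not in sentence:
            raise ValueError(f"{answer!r} not found in sentence")
        # Doubled braces in an f-string produce literal braces: {{c1::answer}}
        sentence = sentence.replace(answer, f"{{{{c{n}::{answer}}}}}", 1)
    return sentence


def cards_to_csv(cards: list[tuple[str, str]]) -> str:
    """Serialize (cloze_text, extra) pairs as CSV text, one card per row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    for text, extra in cards:
        writer.writerow([text, extra])
    return buffer.getvalue()
```

For example, `make_cloze("Warfarin inhibits vitamin K epoxide reductase.", ["vitamin K epoxide reductase"])` returns `"Warfarin inhibits {{c1::vitamin K epoxide reductase}}."`, and saving the output of `cards_to_csv` to a `.csv` file gives one importable row per card. Phrases are replaced in order, so avoid listing an answer that also appears inside an earlier one.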

AI for medical information support: inline comparison of literature based on clinical medical notes. Imagine a note-writing interface that presented the most clinically relevant guidelines for the note you were writing, drawn from blue-ribbon information sources. Having trouble remembering the components of the CHA2DS2-VASc score, or when to use it? What about the latest American Diabetes Association guidelines? Want to save those guidelines for later review or future use? Information support, as opposed to “decision support” (a truly horrific euphemism), represents the best of AI-human systems and can be turned into knowledge acquisition and retention systems. Save the articles you rely upon, then use the spaced repetition tools from the first strategy above to turn those articles into questions. Too difficult? Summarize the articles and tie them to the cases you are actually seeing using the generative interface.

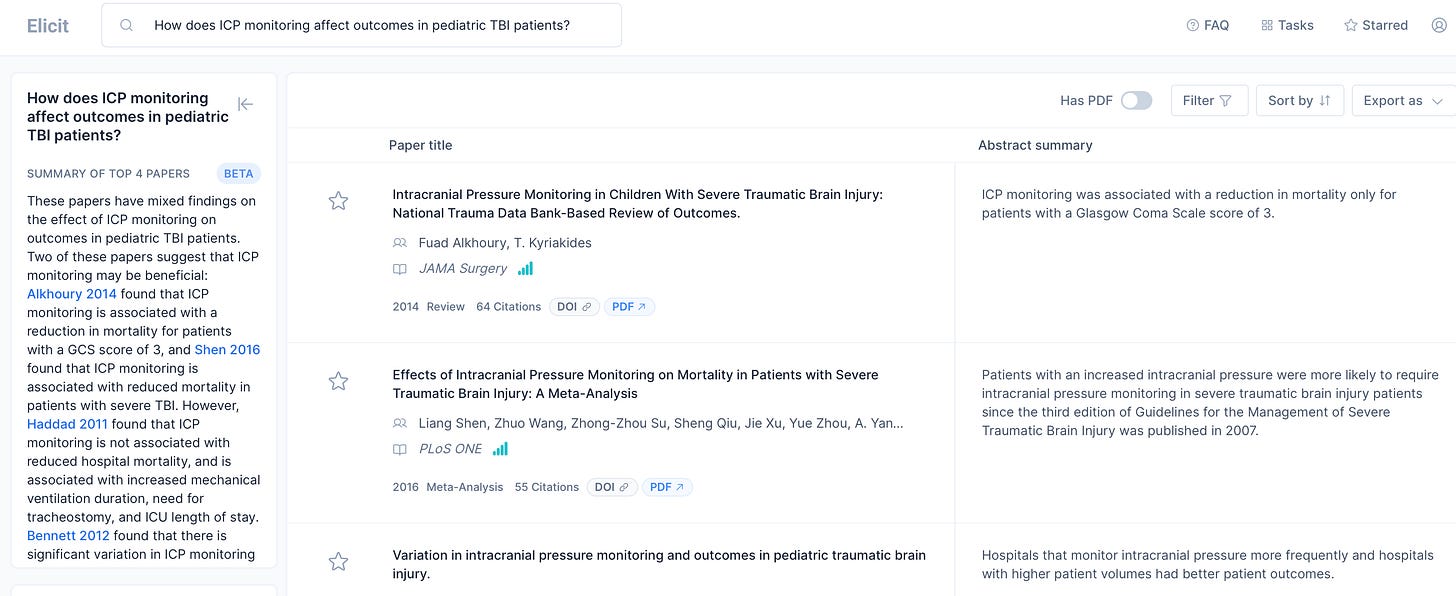
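The CHA2DS2-VASc score referenced above is a good example of facts worth writing down explicitly. The sketch below encodes the standard point scheme (1 point each for congestive heart failure, hypertension, diabetes, vascular disease, age 65-74 and female sex; 2 points each for age 75 or older and prior stroke, TIA or thromboembolism) as a small Python function. It is a learning aid under those stated assumptions, not a validated clinical calculator, and the `Patient` fields and function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    """Hypothetical input record; each flag is one CHA2DS2-VASc component."""
    age: int
    female: bool                    # Sc: sex category
    chf: bool = False               # C: congestive heart failure
    hypertension: bool = False      # H: hypertension
    diabetes: bool = False          # D: diabetes mellitus
    stroke_tia_te: bool = False     # S2: prior stroke, TIA or thromboembolism
    vascular_disease: bool = False  # V: prior MI, peripheral artery disease or aortic plaque


def cha2ds2_vasc(p: Patient) -> int:
    """Sum the CHA2DS2-VASc points (range 0-9) for one patient."""
    score = 0
    score += 1 if p.chf else 0
    score += 1 if p.hypertension else 0
    # A2 / A: age 75 or older scores 2; age 65-74 scores 1.
    if p.age >= 75:
        score += 2
    elif p.age >= 65:
        score += 1
    score += 1 if p.diabetes else 0
    score += 2 if p.stroke_tia_te else 0
    score += 1 if p.vascular_disease else 0
    score += 1 if p.female else 0
    return score
```

For instance, a 78-year-old woman with hypertension scores 2 (age) + 1 (sex) + 1 (hypertension) = 4. Spelling the components out like this is also a quick way to turn a guideline into your own flashcards.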

Exploring the edges with AI: Let’s say you’ve identified an area where you want to improve, but don’t exactly know where to go. For example, pediatric TBI. You might want to test your knowledge with questions, but where can you find them? Consider using LLM-generated questions as a starting point for further exploration, recognizing that the LLM is not a gold-standard reference text but a probabilistic representation of language.

Now, you can’t ask LLMs for references and trust them directly, as this is a major source of confabulation (hallucination). But you can use elicit.org (screenshot below), Google Scholar or the venerable PubMed search engine.
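If you want to check LLM-suggested topics against the literature programmatically, NCBI’s public E-utilities interface exposes PubMed search over HTTP. The sketch below builds an `esearch` query URL and parses the list of PubMed IDs from the JSON response. The helper names are hypothetical, only the URL-building and parsing are exercised without a network connection, and you should consult NCBI’s E-utilities documentation for usage limits before running searches in bulk. Every returned ID still needs to be read, not just counted.

```python
import json
import urllib.parse
import urllib.request

ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def esearch_url(term: str, retmax: int = 20) -> str:
    """Build a PubMed esearch URL that asks for a JSON response."""
    params = {"db": "pubmed", "term": term, "retmode": "json", "retmax": retmax}
    return f"{ESEARCH}?{urllib.parse.urlencode(params)}"


def parse_pmids(response_text: str) -> list[str]:
    """Extract the list of PubMed IDs from an esearch JSON response."""
    data = json.loads(response_text)
    return data["esearchresult"]["idlist"]


def search_pubmed(term: str, retmax: int = 20) -> list[str]:
    """Run a live search (needs network access; respect NCBI's rate limits)."""
    with urllib.request.urlopen(esearch_url(term, retmax), timeout=30) as resp:
        return parse_pmids(resp.read().decode("utf-8"))
```

For the pediatric TBI example, `search_pubmed("pediatric traumatic brain injury", retmax=5)` would return up to five PubMed IDs, which you can then look up on PubMed directly instead of trusting a citation the chatbot produced.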

Customized Knowledge Course Creation: You can then ask the LLM to generate knowledge content to brush up on topics that you want to understand better. Again, the key is to view these as possible questions rather than the gospel of medical management. However, it is a reasonable starting point.

Generating Learning Apps with no-code AI assistance. We will touch on these techniques in upcoming newsletters. For now, you should know that innovations that can dramatically reduce the time and expertise required to build your own learning and knowledge management tools are already in production.

For any educators in the audience, Ethan and Lilach Mollick have a nice guide here.
