What's New in Surgical AI: June 4, 2023

Vol. 27: Generative AI and Surgical AI, Oh My!

Welcome back! If you’re new to ctrl-alt-operate, we do the work of keeping up with AI, so you don’t have to.

This week, Time magazine led the doomer charge with its cover (“The end of humAnIty”), but we told you to expect this in our last issue. NVIDIA (NVDA) hit a $1 trillion market capitalization. Adobe (ADBE) released a new version of Photoshop that “magically” fills in or replaces parts of images using generative AI. And much more…

This week we will get deep into generative AI, from fake celebrity videos to synthetic training data for health care. It’s a critical area that is all the rage, and we’ll take it on. We’ll also look at the state of surgical AI research in our deep dive.

As always, we are grounded in our clinical-first context, so you can be a discerning consumer and developer. We’ll help you decide when to bring A.I. into the clinic, hospital, or O.R.

Table of Contents

The News: 📰 Another mind-boggling week in AI

Deep Dive: 🤿 The state of surgical AI research: an update

The News: Another Mind-Boggling Week in AI, Zooming In on Generative AI

Many things happened this week in AI (the AI-powered discovery of a new antibiotic against Acinetobacter might be particularly cool), but I want to trace one common thread of progress: generative AI. There are many reasons (billions of dollars of venture funding among them) to think more about generative AI in medicine, and there are many exciting use cases, but we have to start from the basic definition:

Generative AI is a family of neural network models that generate (synthesize) output based on a given input sequence. Unlike traditional neural networks, which report back a prediction or classification of the input sequence, generative models output a new sequence. Each generative model works in specific modalities (text, image, video, audio), and its input and output modalities can differ (translating text to image, for example). Examples include the GPT models, DALL-E, Stable Diffusion, and Midjourney; CLIP, a model that links images and text, is not itself a generator but serves as a building block in several of these systems.
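To make the distinction concrete, here is a deliberately tiny sketch with no neural networks at all. The keyword rule `classify` stands in for a discriminative model (it maps an input to one of a fixed set of labels), and a word-level bigram model stands in for a generative one (it samples a new sequence one token at a time). The corpus, labels, and function names are all made up for illustration.

```python
import random
from collections import defaultdict

# Made-up toy corpus of short "notes".
TEXTS = [
    "the patient walked home",
    "the nurse slept well",
    "the patient slept well",
    "the nurse walked in",
]
LABELS = ("mobile", "resting")

def classify(text):
    """Discriminative stand-in: the output is always one of a fixed set of labels."""
    return "mobile" if "walked" in text.split() else "resting"

def train_bigrams(texts):
    """Record which word follows which, with sentence start/end markers."""
    follows = defaultdict(list)
    for text in texts:
        words = ["<s>"] + text.split() + ["</s>"]
        for current, nxt in zip(words, words[1:]):
            follows[current].append(nxt)
    return follows

def generate(follows, rng, max_len=20):
    """Generative stand-in: sample a new word sequence one token at a time."""
    word, output = "<s>", []
    for _ in range(max_len):
        word = rng.choice(follows[word])
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

model = train_bigrams(TEXTS)
print(classify("the patient walked home"))  # → mobile
print(generate(model, random.Random(1)))    # a sampled sentence, possibly one not in TEXTS
```

Because “walked” can follow either “patient” or “nurse”, the generator can produce sentences such as “the patient walked in” that never appear in the corpus: a new sequence rather than a label.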

Here’s why generative AI models are so important, right now, in medicine:

Synthetic Data Is All the Rage in Biomedicine. Machine learning algorithms are data-hungry. Many of the best-known models outside of medicine are trained on billions or trillions of data points: GPT-3 was trained on hundreds of billions of text tokens, much of it scraped from web pages. OpenAI has not disclosed GPT-4’s training data, but it is reasonable to assume it is larger still. Collecting biomedical data is hard: the institutions that hold it are reluctant to share it, yet often lack the expertise to study it themselves. Few marketplaces for medical data exist, and those that do are seldom truly open. But what if we could create more training data for models without waiting to accrue patients or perform costly diagnostic tests?

Enter the world of generative AI. You’ve heard about it: fake videos of Will Smith eating pasta, political leaders saying things they never said, and of course GPT itself. But what if synthetic data like this were good enough to train AI models, at least to a first approximation (pre-training), getting close enough that a smaller real dataset could do the rest of the work (fine-tuning)?
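The pre-train/fine-tune idea can be sketched in a few lines. This is a minimal illustration under heavy assumptions: a one-feature logistic regression stands in for a neural network, and both datasets are simulated. The “synthetic” set is large but slightly wrong (its class boundary sits at x = 0), while the “real” set is small and has its boundary at x = 0.5. Fine-tuning here simply means continuing training from the pre-trained weights rather than from zero.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, w=0.0, b=0.0, lr=0.1, epochs=200):
    """Batch gradient descent on logistic loss, starting from the given (w, b)."""
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            error = sigmoid(w * x + b) - y
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return w, b

def accuracy(data, w, b):
    """Fraction of points where the 0.5-threshold prediction matches the label."""
    return sum((sigmoid(w * x + b) >= 0.5) == (y == 1) for x, y in data) / len(data)

rng = random.Random(42)
# Simulated "synthetic" data: plentiful, but its boundary is slightly off (x = 0).
synthetic = [(x, int(x > 0.0)) for x in (rng.uniform(-3, 3) for _ in range(1000))]
# Simulated "real" data: scarce, with the true boundary at x = 0.5.
real = [(x, int(x > 0.5)) for x in (rng.uniform(-3, 3) for _ in range(30))]

w_pre, b_pre = train(synthetic)                     # pre-training on synthetic data
w_ft, b_ft = train(real, w_pre, b_pre, epochs=100)  # fine-tuning from pre-trained weights

print("real-data accuracy, pre-trained only:", accuracy(real, w_pre, b_pre))
print("real-data accuracy, after fine-tuning:", accuracy(real, w_ft, b_ft))
```

Run it and compare the two printed accuracies; the key line is the second call to `train`, which starts from `w_pre, b_pre` instead of zero.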

In a recent paper, Dolezal et al. created synthetic pathology slides of tumors using a neural network design called a conditional generative adversarial network (cGAN), a type of image generator that uses the image labels as part of its training process. Their network generated realistic images (below). Most interestingly, they showed that these synthetic images could be used to train pathologists: after a one-hour training session, the pathologists’ classification accuracy on real images rose from 72.9% to 79%. Not surprisingly, their skills improved most on real images similar to the ones the network was “best” at generating. This creates a fascinating educational paradigm: AI can augment education by providing more relevant examples of rarely seen pathology, helping pathologists “home in on” the features the network finds “most relevant” to its image generation. In essence, the AI is “learning” which visual elements best differentiate between tumor types, and then “teaching” that insight back to the pathologists.

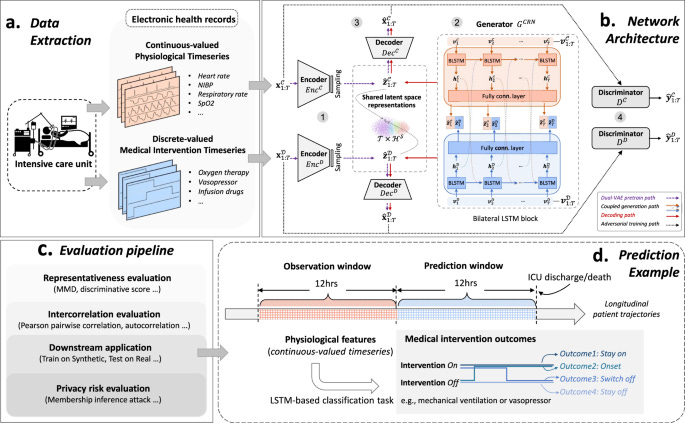
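The phrase “uses the image labels as part of its training process” has a concrete meaning. In the original conditional GAN formulation, the class label is fed to both networks: the generator receives random noise plus the label, and the discriminator receives a (real or generated) image plus the same label, so it learns to judge whether an image is a realistic example of that particular class. The sketch below shows only that input wiring, using the simplest scheme (concatenating a one-hot label vector). The tumor class names are hypothetical, and we are not claiming this is the exact conditioning scheme Dolezal et al. used.

```python
import random

# Hypothetical class labels for illustration only.
CLASSES = ["tumor_type_A", "tumor_type_B", "tumor_type_C"]

def one_hot(label, classes=CLASSES):
    """Encode a class label as a vector with a single 1.0."""
    if label not in classes:
        raise ValueError(f"unknown label: {label}")
    return [1.0 if c == label else 0.0 for c in classes]

def generator_input(label, noise_dim, rng):
    """Generator input: a random noise vector z concatenated with the label."""
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return z + one_hot(label)

def discriminator_input(image_features, label):
    """Discriminator input: the sample (real or generated) concatenated with its label."""
    return list(image_features) + one_hot(label)

rng = random.Random(0)
g_in = generator_input("tumor_type_B", noise_dim=8, rng=rng)
print(len(g_in), g_in[-3:])                              # → 11 [0.0, 1.0, 0.0]
print(discriminator_input([0.2, 0.7], "tumor_type_C"))   # → [0.2, 0.7, 0.0, 0.0, 1.0]
```

In a full cGAN, both vectors would feed neural networks trained adversarially; at sampling time, choosing the label is what lets you ask for more examples of a specific (say, rarely seen) tumor type.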

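Li et al. did similar work for EHR data, generating synthetic time-series data. Time-series data, composed of repeated patient measurements across a time interval, has traditionally been difficult for GANs, because a generator must reproduce not only realistic individual values but also realistic changes over time. It’s a space very much worth watching.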

Generative AI can read your mind. We are huge fans of the MedARC research organization concept. One of their first papers generates viewed images from brain activity. Yes, that is what it sounds like: you look at an image in the fMRI scanner, the neural network reads the changes in your blood-oxygen-level-dependent (BOLD) signal, and it identifies the image you are looking at with >90% accuracy. Next up (already in the works): scalp electrode (EEG) recordings.
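Accuracy figures for this kind of brain decoding are often retrieval scores. As we understand MedARC’s MindEye work, a model maps fMRI activity into the same embedding space used for images (CLIP image space), and the decoded embedding is compared with a pool of candidate image embeddings; a trial counts as a hit if the closest candidate is the image the person actually saw. The sketch below shows only that scoring step, assuming the brain-to-embedding model already exists; the three-number embeddings and image names are made up.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def retrieve(decoded, candidates):
    """Name of the candidate image whose embedding is most similar to the decoded one."""
    return max(candidates, key=lambda name: cosine(decoded, candidates[name]))

def top1_accuracy(trials, candidates):
    """Fraction of (decoded_embedding, true_image) trials where retrieval is correct."""
    hits = sum(retrieve(decoded, candidates) == truth for decoded, truth in trials)
    return hits / len(trials)

# Made-up candidate image embeddings and decoded brain embeddings.
candidates = {"beach": [1.0, 0.0, 0.0], "dog": [0.0, 1.0, 0.0], "car": [0.0, 0.0, 1.0]}
trials = [
    ([0.9, 0.1, 0.0], "beach"),
    ([0.1, 0.2, 0.9], "car"),
    ([0.6, 0.5, 0.0], "dog"),  # decoded embedding lands closer to "beach": a miss
]
print(top1_accuracy(trials, candidates))  # 2 of 3 trials correct
```

The more candidates in the pool, the harder the task, which is why the pool size matters when reading any retrieval accuracy number.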

And if generative AI can see the world as you see it, what use might that be?

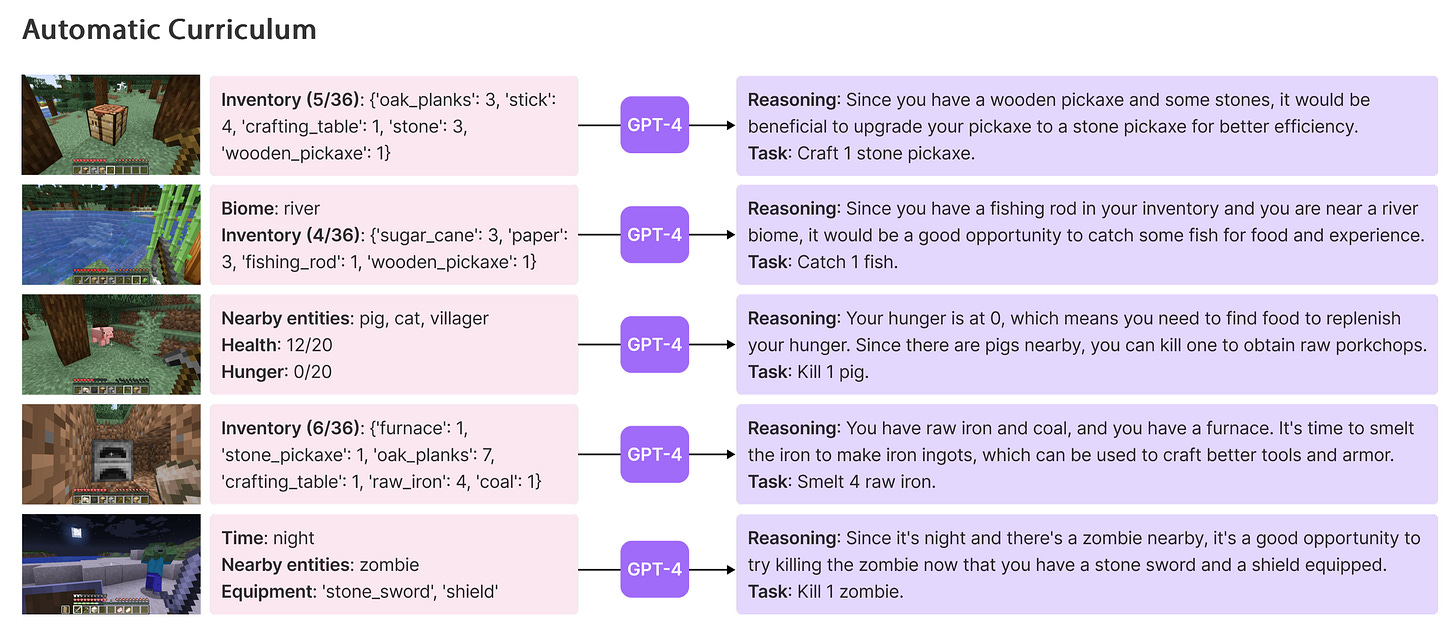

Well, a world-beating team led by friend of the substack and Caltech Prof. Anima Anandkumar recently built Voyager, an AI agent that plays the video game Minecraft by using GPT-4 to write and refine its own code. Compared with earlier agents, it travels farther, is better at crafting objects, and performs significantly better at playing Minecraft overall. It also troubleshoots its own code much better with GPT-4 than with GPT-3.5. Other models, such as STEVE-1, can see images from the video game, interpret them, and act on text-based instructions (“go chop some seaweed”).
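The “troubleshoots its own code” part refers to a feedback loop: the language model writes a program, the program is run in the game, and any error message is fed back into the next prompt. Below is a stripped-down sketch of just that loop. `write_code` and `run` are hypothetical stand-ins (not Voyager’s code and not any real LLM API), and Voyager itself adds more on top, including environment feedback, a self-verification step, and a library of reusable skills.

```python
from typing import Callable, Optional, Tuple

def refine_until_success(
    task: str,
    write_code: Callable[[str, Optional[str]], str],  # hypothetical LLM call: (task, last error) -> code
    run: Callable[[str], Optional[str]],              # hypothetical executor: code -> error message or None
    max_rounds: int = 4,
) -> Tuple[str, int]:
    """Ask for code, run it, and feed any error back until it works or we give up."""
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        code = write_code(task, feedback)
        feedback = run(code)
        if feedback is None:  # no error: accept this program
            return code, round_no
    raise RuntimeError(f"no working program after {max_rounds} rounds; last error: {feedback}")

# Toy stand-ins: the "model" fixes its bug once it sees the error message.
def fake_write_code(task: str, feedback: Optional[str]) -> str:
    return "craft('pickaxe')" if feedback else "craft(pickaxe)"

def fake_run(code: str) -> Optional[str]:
    return None if code == "craft('pickaxe')" else "NameError: name 'pickaxe' is not defined"

code, rounds = refine_until_success("make a pickaxe", fake_write_code, fake_run)
print(code, rounds)  # → craft('pickaxe') 2
```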

What does this mean for surgeons? Imagine if, instead of creating a MineDojo (a giant training library of all human knowledge about Minecraft), we created a surgical video training ground for AI models. Sure, you’d need an underlying physics engine for surgery itself, which is the obvious missing link here, but with games like “Surgeon Simulator” and a growing industry of serious games for healthcare, how far away could this be?

And what would happen if we took a real moonshot: a $250M “virtual surgery world” simulating a single specialty, like brain surgery or abdominal surgery, at roughly the cost of the most expensive video games ever created? There’s every reason to think it might cost much less (especially if the new Unity AI engine is as good as promised). And even if it cost us $2B to create accurate simulations covering the vast majority of surgery, that would be only 0.05% of US healthcare spending for one year. Until now, there has never been a reason to do this. Today, if we had a virtual surgical universe, we could change the face of surgery forever.
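A quick check of the 0.05% figure, assuming total U.S. health spending of roughly $4.3 trillion per year (the CMS National Health Expenditure estimate for 2021):

```latex
\frac{\$2 \times 10^{9}}{\$4.3 \times 10^{12}} \approx 4.7 \times 10^{-4} \approx 0.05\%
```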

Let’s get building.

🤿 Deep Dive: The State of Surgical A.I. Literature

This week, our deep dive looks at the latest issue of Neurosurgical Focus, a monthly journal from the Journal of Neurosurgery publishing group in which every issue centers on a single theme. This month's theme is, unsurprisingly, machine learning.

For our non-neurosurgical audience: fear not, this will not be a deep dive into the world of clinical outcomes research in spine surgery, or different mRNA markers in glioblastoma. Instead, we will use these articles to highlight where surgical A.I. research is (or was at the time of submission), along with some common threads and insights that might be true in your field as well.

Machine Learning as a Predictive Tool

One common thread is the use of machine learning as a predictive tool. Park et al. explored the use of ML models to predict postoperative improvements in pain in patients with cervical myelopathy. Another article describes the development of nonlinear k-nearest neighbors (kNN) classification algorithms to identify patients at high risk of prolonged hospital stay following spine surgery. A team from Jefferson found that in meningioma, radiographic features from preoperative MRI could be used to predict the Ki-67 index, a histopathological proliferation marker normally measured only after surgery; a high Ki-67 index portends a poor prognosis. Knowing a patient’s Ki-67 beforehand could guide patient-specific treatment strategies, surveillance plans, or timing of surgery.
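For readers who haven’t met k-nearest neighbors: to classify a new patient, find the k most similar patients in the training data and take a majority vote of their outcomes. The sketch below shows that idea only; the two features (age and number of fused levels), the eight patients, and k = 3 are invented for illustration and are not taken from the paper. Features are standardized first because otherwise age, which spans a much wider numeric range, would dominate the distance.

```python
import math
from collections import Counter

# Invented training data: ((age in years, number of fused levels), 1 = prolonged stay, 0 = not).
TRAIN = [
    ((45, 1), 0), ((52, 2), 0), ((38, 1), 0), ((61, 2), 0),
    ((70, 4), 1), ((66, 5), 1), ((74, 3), 1), ((59, 6), 1),
]

def fit_scaler(train):
    """Compute per-feature mean and standard deviation; return a scaling function."""
    columns = list(zip(*(features for features, _ in train)))
    means = [sum(col) / len(col) for col in columns]
    stds = [math.sqrt(sum((v - m) ** 2 for v in col) / len(col)) or 1.0
            for col, m in zip(columns, means)]

    def scale(features):
        return tuple((v - m) / s for v, m, s in zip(features, means, stds))
    return scale

def knn_predict(query, train, k=3):
    """Majority vote among the k training examples closest to the query (Euclidean)."""
    nearest = sorted(train, key=lambda item: math.dist(query, item[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

scale = fit_scaler(TRAIN)
scaled_train = [(scale(features), label) for features, label in TRAIN]
print(knn_predict(scale((72, 4)), scaled_train))  # → 1 (flagged as high risk)
print(knn_predict(scale((40, 1)), scaled_train))  # → 0
```

The “nonlinear” label fits: the decision boundary kNN draws depends on where the training patients sit rather than on a single straight line.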

Understanding and Interpreting Complex Biological Systems

One of the best use cases for A.I., in my book, has to be consolidating and processing incredibly large amounts of data. I’m talking human-genome-level volumes of data, and finding meaning in them through an automated process. One study delves into the metabolomic differentiation of tumor core versus edge in glioma. Taking N metabolic compounds and determining how each correlates with a particular functional tumor status is not something humans do intuitively. Separately, another article explored how interhemispheric connections maintain language postoperatively, addressing the problem of postoperative aphasia in glioma patients. This is a) an important problem and b) again a perfect example of how problems with lots of complex inputs are well suited to ML models. The concern we always run into, however, is: how do we know we’ve captured all the right inputs?
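One simple way to start on the “N compounds versus tumor status” problem is a univariate screen: for each compound, measure how far apart its levels are in tumor-core versus tumor-edge samples, then rank compounds by that separation. The sketch below uses Cohen’s d (difference in means divided by the pooled standard deviation). It is our illustration, not the study’s method; the compound names and values are invented, and a real analysis would also need to correct for multiple comparisons and would likely use multivariate models that capture interactions between compounds.

```python
import statistics

# Invented data: compound -> (levels in tumor-core samples, levels in tumor-edge samples).
SAMPLES = {
    "compound_1": ([5.1, 4.8, 5.3, 5.0], [2.1, 2.4, 1.9, 2.2]),
    "compound_2": ([3.0, 3.2, 2.9, 3.1], [3.1, 2.9, 3.0, 3.2]),
    "compound_3": ([1.0, 1.4, 1.2, 1.1], [2.0, 2.3, 2.1, 1.9]),
}

def cohens_d(core, edge):
    """Difference in means divided by the pooled (sample) standard deviation."""
    n1, n2 = len(core), len(edge)
    pooled_var = ((n1 - 1) * statistics.variance(core)
                  + (n2 - 1) * statistics.variance(edge)) / (n1 + n2 - 2)
    return (statistics.mean(core) - statistics.mean(edge)) / pooled_var ** 0.5

def rank_compounds(samples):
    """Compounds sorted by how strongly they separate core from edge (largest |d| first)."""
    scores = {name: cohens_d(core, edge) for name, (core, edge) in samples.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)

for name, d in rank_compounds(SAMPLES):
    print(f"{name}: d = {d:+.2f}")
```

A positive d means the compound is higher in the core. The ranking also illustrates the limit raised above: it can only surface compounds that were measured in the first place.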

Bridging the Gap: From Predictive Models to Holistic Clinical Solutions

The potential of machine learning in neurosurgery, as demonstrated in the articles from Neurosurgical Focus, is undeniably powerful. The ability to accurately predict surgical outcomes, interpret complex biological systems, and identify nuanced patterns in patient data could revolutionize the field. But to borrow a phrase from the world of tech startups, we're still very much in the "proof of concept" phase. We're demonstrating that machine learning can solve isolated problems, but the real magic happens when we integrate these solutions into the larger clinical workflow.

To make machine learning truly transformative, we need to transition from proof of concept to proof of value. This involves not just predicting surgical outcomes, but also demonstrating how these predictions can inform clinical decision-making, tailor patient care, and improve health outcomes. As researchers and clinicians, we should focus on developing comprehensive systems that embed machine learning tools within the clinical environment. These could take the form of decision-support systems that provide real-time insights to clinicians or patient management tools that personalize care plans based on predictive algorithms.

How, you ask? I think the answer is simple: clinicians need to become builders. The more surgeons and clinicians tinker with software, whether by putting models into a user interface they can actually interact with or just by playing with model code to see how different parameters or datasets influence the outcome, the closer we get.

If you don’t have a technical background, that’s okay. A.I. tools like ChatGPT, Replit Ghostwriter, and GitHub Copilot can give you the help you need to go from zero to a working proof of concept. Surgeons have a rich history of invention and tinkering. Just because software requires a new set of skills doesn’t mean that culture of innovation should change.

Rapid Research Dissemination: Embracing the Pace of Innovation

Another takeaway: artificial intelligence is advancing at an unprecedented pace. New algorithms, models, and applications are being developed every day. But while the field evolves rapidly, the process of disseminating this research, traditional academic publishing in particular, is not keeping up.

Before you all start defending journals and their need for peer review, ask yourself: what’s the longest you’ve waited between getting a manuscript accepted and seeing it published? For me it was about 11 months.

I cannot even imagine what the world of A.I. will look like 11 months from now. Why would any research I publish today still be relevant then?

The solution? We need to create new avenues for rapid, yet rigorous, research dissemination. medRxiv and other preprint servers are fine, but oftentimes adversarial reviewers make papers better, and plenty of times things just don’t deserve to be published in the first place. How do we create a system where 1) reviewers are incentivized to review, 2) they are incentivized to review quickly, 3) the bar for entry stays high, and 4) turnaround is rapid, with near-zero time from acceptance to dissemination?

Is anyone working on this problem? Let us know at ctrlaltoperate@gmail.com

Feeling inspired? Drop us a line and let us know what you liked.

Like all surgeons, we are always looking to get better. Send us your M&M-style roastings or favorable Press Ganey ratings by email at ctrl.alt.operate@gmail.com