Welcome back! Here at Ctrl-Alt-Operate, we sift through the world of A.I. to retrieve high-impact news from this week that will change your clinic and operating room tomorrow.

This week we will dive deeper into the use of computer vision models for surgical performance assessment by explaining the strengths and limitations of the models and methods, at a level that surgeons and clinicians can understand. We’ll give you a rundown of our trip to Montreal for Surgical Data Science sessions and a visit to the virtual reality and simulation lab at MNI. The war of words surrounding the potential risk that AI poses to human existence has been heating up, the AI hype cycle is going full speed ahead, and regulators are taking notice.

As always, we’ll do the heavy lifting, keeping track of the who’s who, what’s what, and bringing it back to the OR, clinic, hospital, or wherever else you find yourself delivering care.

This week, you’ll notice that some twitter links are a bit off due to the substack-twitter feud. Ultimately, the feud may even be good for our newsletter as it will encourage us to focus on topics more interesting to our readership, but the twitter images will be less “clickable”. So you’ll see a URL beneath them. We will keep you posted.

Table of Contents

📰 News of the Week: Trip Report to Canada and Quick Hits

🤿 Deep Dive: More Than Meets The Eye: Vision Transformers for Surgical Performance Assessment, Part 2.

🐦🏆 Tweets of the Week

🔥📰News of the Week:

Trip Report: Montreal 2023

Last week we took a trip to Montreal for a Surgical Data Science symposium. This organization, led by Lena Maier-Hein and others, has been bringing folks interested in surgery, technology and AI to meet to discuss progress and outline future opportunities in the field. Our meeting focused on the needs assessment of surgeons for developing AI systems in the OR, and gave us some new connections to other global leaders in surgical AI.

We then visited the Montreal Neurological Institute labs, including Prof. Rolando Del Maestro (Neurosim Lab), Prof. Louis Collins (Neuroimaging and Surgical Technologies lab), and their teams for a research presentation and tour. We presented our recent updates on surgical performance assessment and learned about Prof. Del Maestro’s process for evaluating VR simulators and building an autonomous surgeon feedback system in VR, and Prof. Collins’ pioneering work in neuronavigation. Dan got his surgical technique graded in both VR and in an ex vivo calfs-brain model of epilepsy resection (he did well, they said). Here’s an example of the recent work from the Del Maestro lab, from first author Recai Yilmaz MD PhD.

Quick Hits

Google’s CEO claims that they will start integrating chat into Google search. Feels like a defensive move since OpenAI plug-ins and MSFT 0.00%↑ Perplexity have made significant inroads into the search market for the first time in years.

Meanwhile, ChatGPT sturm-und-drang continues with real developments, anti-hype and hype. Anti-hype: ChatGPT was banned in Italy for “privacy concerns”, and since EU follows a common standard, additional regulatory action (but not necessarily bans) will follow.

Voices calling for a “pause” in AI research are growing louder, and so are the counter-voices. This is an issue that touches on technology, regulation, domestic and even international political concerns. Here’s Tyler Cowen, polymath-economist (marginalrevolution.com is a daily read of mine):

Stanford released their “State of AI” update. As readers will know, industry is developing ML models at a scope, scale and pace 10x greater than academia. This is due to cost and hardware requirements, as well as the need to respond to increasing demand for business use cases (instead of academic CS research).

Here’s a take-home chart from Eric Topol showing the rising cost and computational requirements (Floating Point Operations / second) of recent models (GPT 3, chat and GPT4 are not pictured because those details were not released)

BloombergGPT press release: Bloomberg developed an in house, “small” 50B parameter model (1/3 the size of GPT3, GPT4 is unknown). The model was trained on Bloomberg’s financial data over 40 years (web data, news, corporate filings and press releases) as well as public datasets, requiring 1.3 Million GPU hours (A100s, ~$4/hour if purchased on spot instances, they paid less) over 53 days. For a “small model”, it performed well on both general tasks and outperformed many existing models on financial question-answering (no surprise, given the training data) and financial headline generation.

Meanwhile, smaller models with excellent performance are being released daily. Vicuna, a 13B open source model that can be trained for $300 (wouldn’t even make it onto the graph above), achieves 92% of chatGPT performance and can run on desktop hardware. It’s trained on user-shared conversations from chatGPT and uses GPT-4 to judge its output.

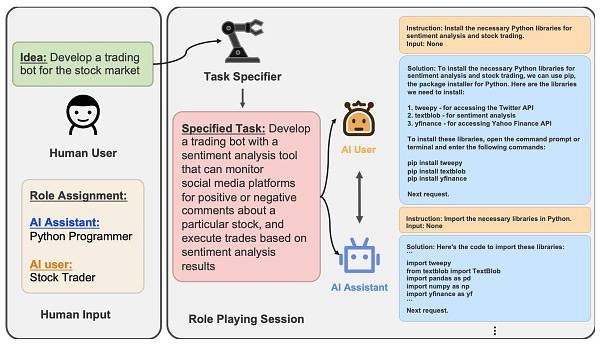

The hype cycle is increasing around “Autonomous GPT systems. ” In one version of this system, the user defines an identity and goal for the chatbot, gives it access to the internet, and allows the chatbot to prompt itself continuously. In this case, a “Chef GPT” is asked to discover an upcoming event and create a new recipe for that event in order to increase net worth, grow social media accounts, etc. Here’s the final recipe (for Earth Day). It’s underwhelming today, but these technologies are going to be used beneath the surface for many applications in the months to come.

In health-GPT, we saw demos like petvet.ai, which purports to provide both diagnoses and treatments for our furry friends in a chat-like interface. I do not endorse this use case and would not trust it with my worst enemies. Physicians and surgeons (human and veterinary alike) will increasing experience patient reports of interactions with these systems due to the largely-unmet demand from patients for information and control over their health information. This trend is only accelerating.

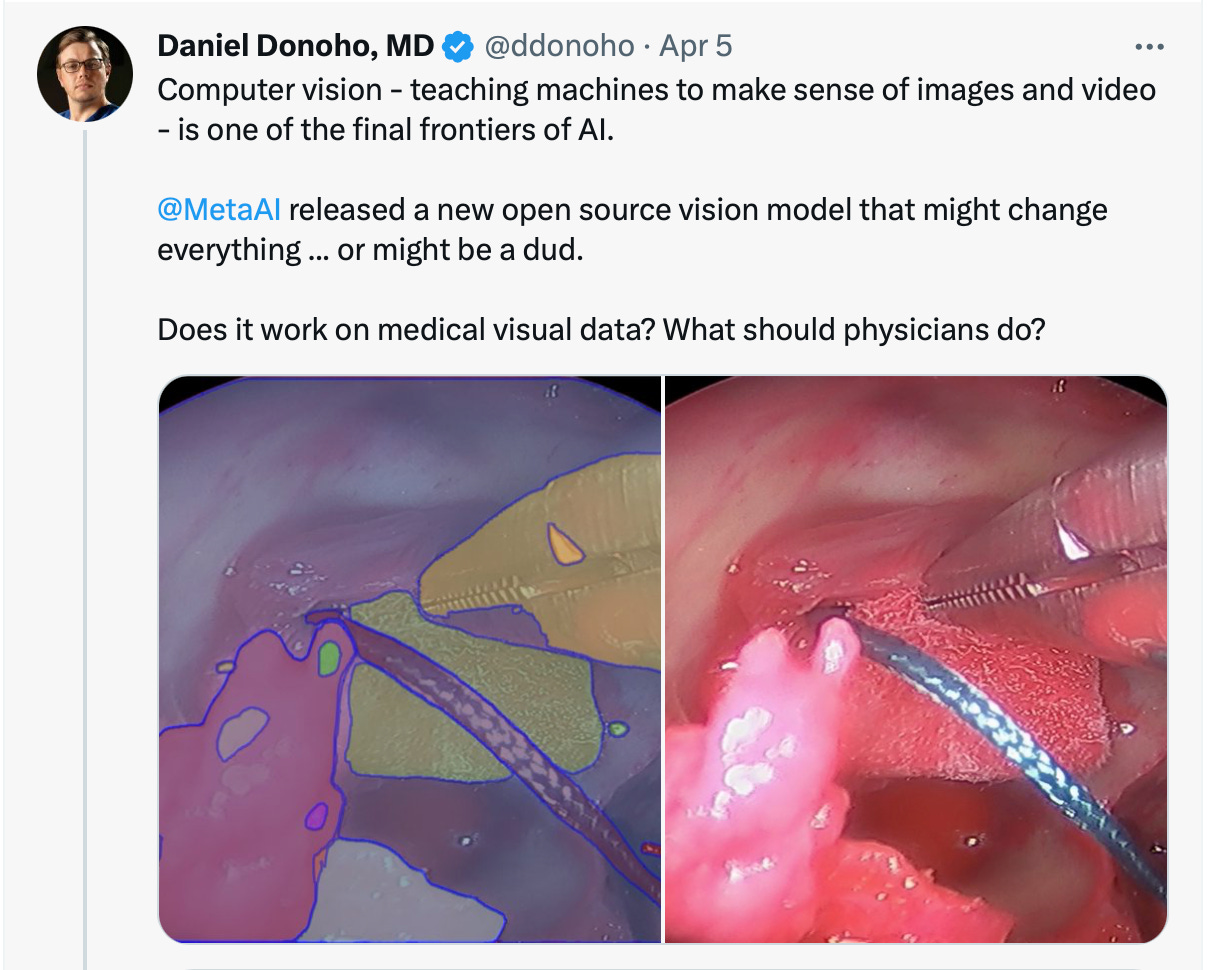

Segment Anything: Facebook AI Research Provides an Open-Source Foundation Model for Image Segmentation

This week, META 0.00%↑ released a foundation model for image segmentation, called "Segment Anything". Image segmentation is the process of classifying one or more pixels within an image as part of an object, like a person, tool, or anatomic feature. Usually, when we are developing models for object detection or segmentation, we have to provide many hundreds or thousands of labelled images to the neural network in order to "train" it what each specific object looks like. Assembling that "training set" requires vast amounts of time, human labelling effort, and money. And then every time you have a new project - you need a new training set. META 0.00%↑ 's model performs object detection and segmentation automatically across any type of image without specific training. That's why it's called a "foundation model": the model's training dataset is so large, and the model is so capable, that it can be applied to new uses with zero- or minimal retraining. You can see an example of this process below, where I used the model to segment previously-unseen surgical video from our publicly available dataset of cartoid artery injury simulations, MRI scans, and other biomedical image sources.

https://twitter.com/visishs/status/1643705449743540231?s=20

🤿 🤖More Than Meets The Eye: Vision Transformers for Surgical Performance Assessment, Part 2.

Our deep dive continues our investigation into the use of computer vision tools for surgical performance assessment. In part 1, we explained the need to 1000x global surgical care, described our rationale for developing a Surgical Artificial Intelligence System, and gave a brief overview of three papers from the larger USC-Caltech group that illustrated a use case of this AI system. In part 2, we delve into the “how”, introducing a higher level understanding of vision transformers so that you can have a general idea when computer vision models might be successful, when they might struggle, and what would be needed for you to get involved in this field.

Ok, that’s nice Dan, now tell me:

What does this system require? (from surgeons and physicians)

When might it fail?

What else can it do / how do I build my own?

How does a neural network work? (without putting me to sleep)

Away we go!

What does this system require: 1. Lots of video. This system was trained on 78 videos divided into 4,774 samples from 19 surgeons. 2. Labelled video. Right now, we need to provide the system with labelled data for training and testing. This requires labels for steps, actions (also called subphases), gestures (surgical instrument movement type) as well as skill assessments (high skill, low skill). This includes hundreds of gestures manually labelled. While the model is now capable of generalizing to unseen video from related procedures with related modalities, it is reasonable to expect a data annotation pipeline to be a critical part of at least validation, if not training the AI system. 3. New use cases both within urology and other disciplines. 4. Continued validation with short and long term clinically relevant endpoints. 5. Pipeline for production. Although not a requirement upon the physicians and surgeons, the current model is a research object that requires design and implementation testing prior to implementation in a clinical workflow.

When might it fail: 1. All AI systems have a risk of bias, and we outlined strategies where we can use training with explanations (TWIX) to ensure that the model and human experts remain aligned. 2. SAIS can ingest very long videos, but the current tasks that it performs are executed against short clips. Long sequences of video are broken down into shorter clips (seconds to a few minutes) for certain tasks within this model. There are challenges and potentially unreasonable computational requirements to ingest videos of many minutes or hours in length. The core belief is that transforming video to sequence / action data enables us to unlock longer surgical procedures. 3. Anatomical understanding. The model doesn’t take patient anatomy or anatomical features as an input. 4. Moving camera perspective. The model was trained and validated on robotic surgery data. We believe that the underlying architecture should work well with other video types, but that’s a testable hypothesis. 5. We don’t know that this model generalizes to all surgical procedures, and there are some surgical procedures which are so variable as to make structured understanding more difficult.

What else can it do / how can I build my own: The research here is in its early days, and there are some interesting possibilities with this architecture. Dani Kiyasseh, the lead machine learning scientist, plans to release the model architecture and code in the coming weeks in his github repo at danikiyasseh.github.io . If you want to build your own… keep reading…

I’m still reading, and I want to learn more about how neural networks function.

Here’s how vision transformers work. First of all, vision transformers are a specialized class of transformers, a neural network that uses a process called self-attention to identify the “most relevant” components of an input (text/image/EEG). Let’s start by understanding the basics of the most common neural network, a multi-layer perceptron, and then understand how that building block fits into a video vision transformer.

Neural Network Basics: Neural networks can accept any type of input data, whether it’s images, text, EEG waves, since it’s all 1s and 0s. These sequences of 1’s and 0’s require specific methods of processing before they are presented to the neural network. This is sometimes called “feature extraction”. Image data might be processed by using methods for detecting edges, textures, colors, or simply by raw pixel values. In a transformer, words are processed into tokens, which are numerical representations of “chunks” of the sequence. Tokens and input sequences have a deterministic relationship - if you know the token, and the tokenizer function that created it, you can always recover the same input from the token.

Here’s an example of character and word tokenizers. The little numbers below the letters or words are the token values, or what the model wants to see.

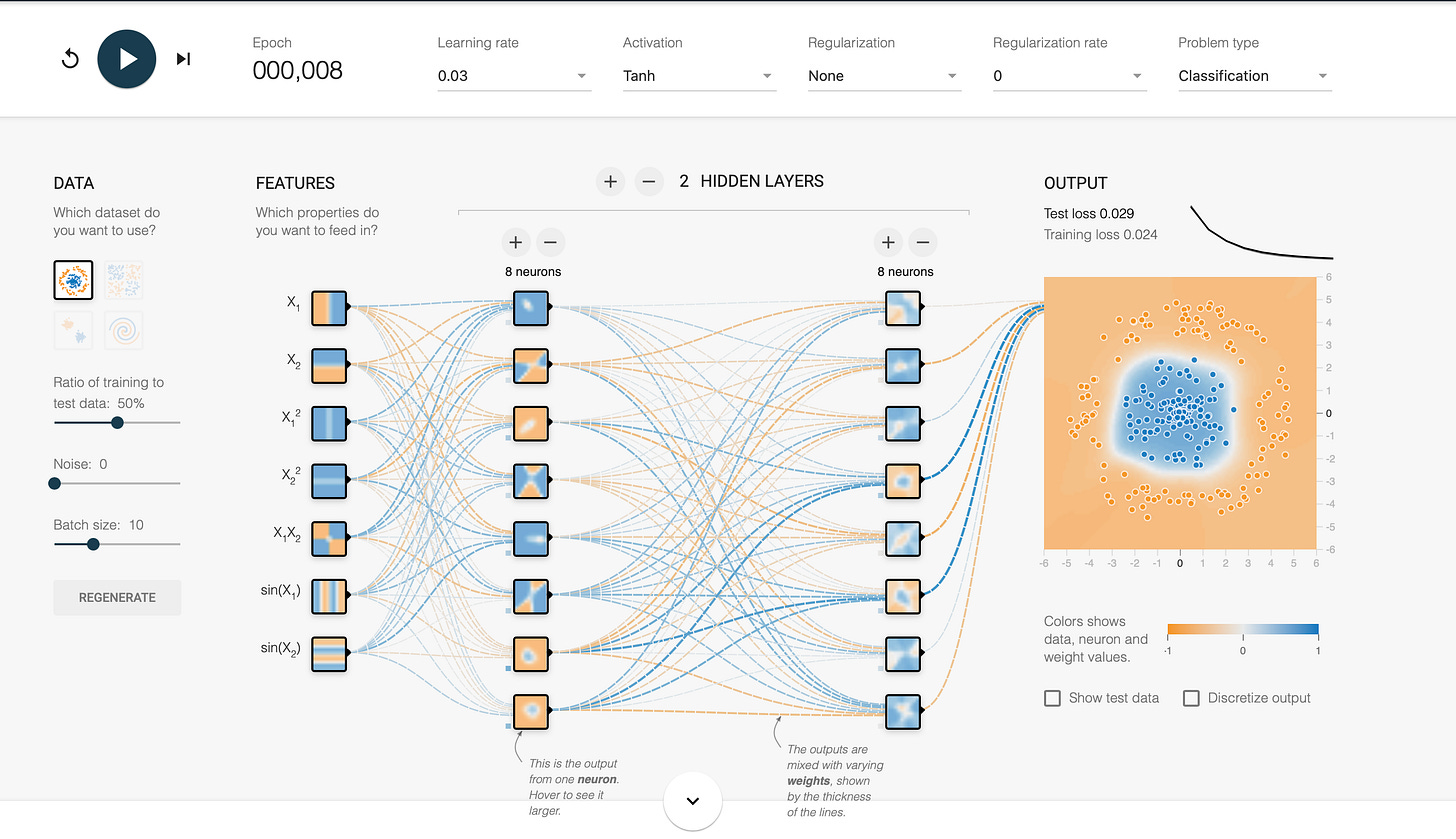

There are several other steps that have to occur (vector embeddings, etc). Now that you’ve got your data “ready”, lets take a look at the neurons in the neural network itself. This kind of network is called a multi-layer perceptron, or MLP.

Neural networks are made up of many (billions, sometimes) of neurons that are organized in layers. Layers in between the input and output layers are typically called “hidden” layers, and they do most of the “work” for the network.

Neurons in one layer are connected to neurons in other layers, but usually* not to neurons of the same layer. Unlike in the human brain, we are most interested in the connections between neurons, not the neurons themselves. Neurons in an AI network typically don’t perform any functions on the data within the neuron. As data passes along the connection between neurons, a mathematical function is performed on the data. This function along the connection between neurons typically contains two “parameters”, or learnable features: a weight and a bias. When data passes from an input neuron along a connection to a receiving, or output, neuron, it is changed by this mathematical function that includes a weight and a bias. The weight and bias are modifiable during the model training process.

So, when you hear that GPT-3 had 175 billion parameters, that means that it had about 87.5 billion connections between neurons, and about 40-80 billion neurons. For reference, the human brain has about 80-100 billion neurons. However, the human brain has a much more complicated set of connections between neurons than most neural networks, with thousands of connections (synapses) from each neuron. In total, the brain has over 100 trillion synapses- 1,000 connections or more per neuron. Once we get to models with hundreds of trillions of parameters, we will truly be at human-scale endeavors. Maybe next year…

You can try this example of an MLP here in a playground.

So how do we train this thing? In a common training method, called supervised learning, you’ll give the network pairs of input data and labels, like a picture of a tool and it’s name (for an object detection task), or sentences with words deleted and the missing words (for NLP tasks). The network then “learns” to output the label based on the the input it “sees” through a training process that adjusts its parameters to improve its performance. In the beginning, the connections between the neurons (weights and biases) might be random or have pre-assigned starting points, so it won’t make very accurate predictions. Let’s train them!

After a pre-specified amount of data (batch) passes through the network (forward pass), the outputted predictions (such as dog, cat) are compared to the true labels (such as dog, dog) using a loss function. The results of that process are then passed back through each layer of the network (backward pass/backprop) in a process that alters the values of the parameters (connections between neurons) to improve the network performance on the next batch (hopefully…).The new parameter values are used for the next batch. Then another batch goes forward through the model, the outputs are scored, and the parameters are updated again in the back prop. Training then continues thusly through the entire data set (epoch) or longer, until any one of a variety of stopping conditions occurs.

Once you have a trained model, you can put it to work by providing it with unlabelled data, and it will provide outputs (like numerical predictions, classifications, words, etc). This is called “inference”. Because the model is only running “forwards” and does not need to update itself, inference requires fewer mathematical operations than training. Thus, inference can be performed on less powerful computational devices than training.

This all sounds very nice, but there’s one problem.

Images are really big compared to text data. Videos contain many images. Even if we had a 100B (or 100T) parameter model, and really good feature extraction, we might not be able to capture the “right” relationships between pixels. So an MLP, in and of itself, won’t get us the right kind of predictions we want. Fortunately, a variety of different types of algorithms and data processing steps have been built around the MLP in order to augment its ability to handle visual data. One of these that we we think will work well is a transformer.

What’s a Transformer, Anyways: The classic example of a transformer is a type of encoder-decoder architecture. Meaning: some data → encoding function → decoding function → some output. Transformers perform a series of mathematical operations on input data in a step called an “attention” layer before the data reaches the “multi-layer perceptron” (MLP) we described above. In an attention mechanism, the elements of an input sequence are compared to understand their relatedness, the relevance of each element to the current sequence, and the importance of each element. The “relatedness” or “importance” of certain elements of a sequence helps the model respond more strongly when it sees those elements. The outputs of the attention layer are then fed forward into an MLP (like above), and an output is then passed into the decoder architecture that provides the model output (a word, object presence, numerical value). There’s a lot more going on here, but the high level understanding is that transformers have the ability to use the relationships between data in a sequence to provide better predictions of subsequent elements in a sequence, and that they inherently utilize this “relatedness” or “expectedness” in their operations. This is referred to as “attention” because someone people believe this is similar to how humans “focus” on the most relevant stimuli to the exclusion of less relevant stimuli. There are many different types of attention (and self-attention), particularly in multidimensional inputs. Which brings us to …

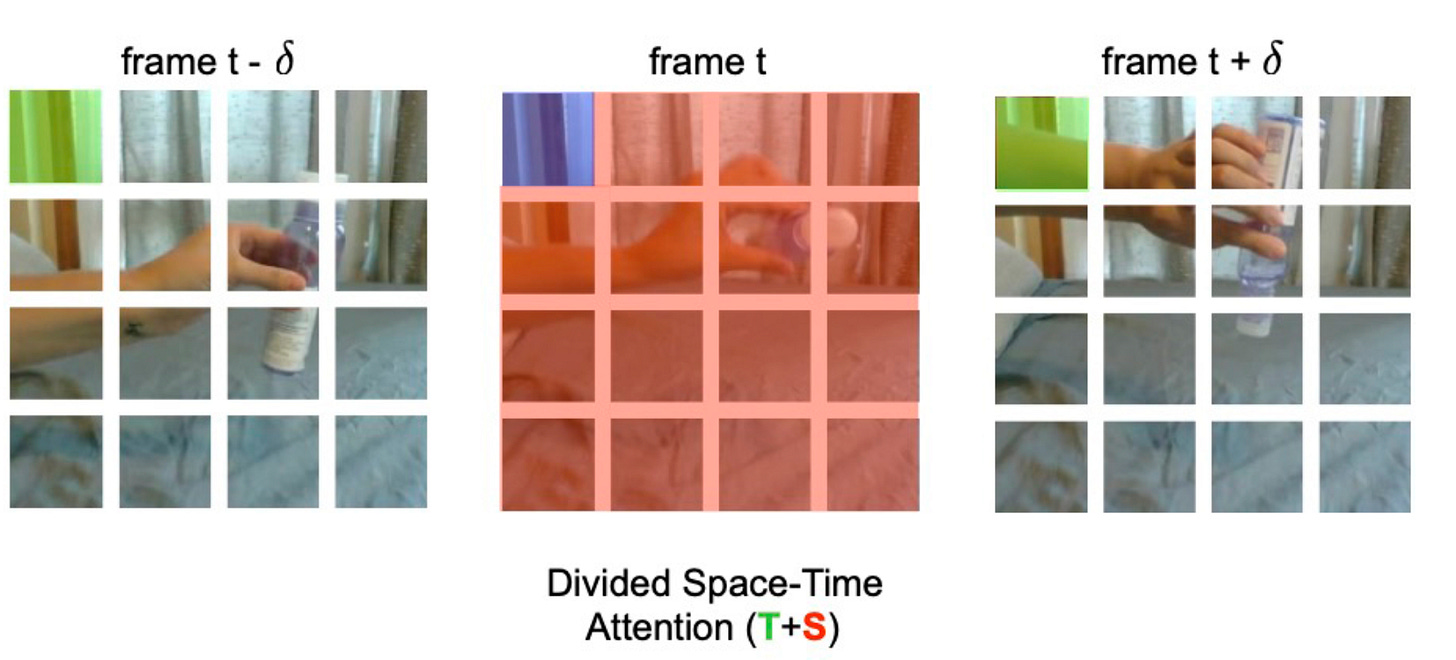

What’s Different about Vision Data: Images are both larger (more 1s and 0s) than text data, but they also contain two spatial dimensions that are important instead of one-dimensional sequence data like words. So, we have to manipulate the 1s and 0s in a particular way to both preserve these relationships and utilize them within the network, in ways that aren’t required for text. Rather than tokenising an image into a short number, we have to perform additional manipulations. For example, we divide each image into different pieces, or “patches” that may be 16 x 16 pixels in size, and place them in a sequence (flattening, linear embedding), then feed them into the network, while keeping track of where they came from (positional encoding). We also have to think about relatedness, for transformer architectures, in multiple dimensions. This is important because transformers quadratically (x^2) increase the number of computational operations based upon the sequence length.

What’s Different about Video Vision Data: Video is the most challenging and resource intensive type of data to work with because it not only has positional (image patches) data but also temporal data. Thus, naively you might need exponentially more mathematical operations for model training and inference, the longer the video, the worse it gets. But people are developing methods to work around this. A good example is the TimeSformer architecture by FAIR, but there are many other/better methods. Here’s their cartoon, you’ll note the patches, some are highlighted in red (indicating that the temporal attention network is giving them higher importance), or into blue/green (indicating greater spatial attention), and the potential interaction between the two. We didn’t use this architecture, but we have some related references below.

What are some examples of video vision transformer models you used in this paper?

https://openaccess.thecvf.com/content/ICCV2021/papers/Caron_Emerging_Properties_in_Self-Supervised_Vision_Transformers_ICCV_2021_paper.pdf

https://github.com/facebookresearch/dino

https://arxiv.org/abs/2104.14294

🐦🏆 Tweets of the Week:

https://twitter.com/npjDigitalMed/status/1644251301809913857?s=20

https://twitter.com/DanStoyanov/status/1642962951823826976?s=20

https://arxiv.org/abs/2303.17651 (ht:Lior tw@AlphaSignalAI)

https://twitter.com/SurgicalAI_Lab/status/1642271032055611393?s=20

https://twitter.com/mattrly/status/1642005132475867136?s=20

See you all next week.

Feeling inspired? Drop us a line and let us know what you liked.

Like all surgeons, we are always looking to get better. Send us your M&M style roastings or favorable Press-Gainey ratings by email at ctrl.alt.operate@gmail.com