What's New in Surgical AI: 4/3/2023 Edition

Vol. 19: More Than Meets The Eye

Welcome back! Here at Ctrl-Alt-Operate, we sift through the world of A.I. to retrieve high-impact news from this week that will change your clinic and operating room tomorrow.

Our deep dive goes behind the scenes at three Nature-family publications released this week 🔥🔥 by our extended research family at Caltech-USC, where we taught computer algorithms to “see” surgical video and provide rapid, actionable feedback to improve surgical outcomes. If you want to catch up on computer vision, we feature it heavily in our writing (here, here, here, here) because we believe it is the most impactful tech coming to surgery.

As always, we’ll do the heavy lifting, keeping track of the who’s who, what’s what, and bringing it back to the OR, clinic, hospital, or wherever else you find yourself delivering care.

Table of Contents

📰 News of the Week

🤿 Deep Dive: Surgeons Using A.I. to Get Better

🐦🏆 Tweets of the Week

🔥📰 News of the Week:

While this week didn’t have quite as much pomp and circumstance as a few weeks ago, there’s still plenty to talk about. Fortunately, with less earth-shattering news, we can home in on some A.I. news in medicine and surgery.

A.I. has officially become mainstream in medicine - the New England Journal of Medicine (NEJM) recently published a new series outlining the uses of A.I. This is a great primer and perfect for your colleague who says things like “I don’t believe this A.I. stuff” (i.e., the same one who said EMRs would never replace charts). NEJM even has an article dedicated to ChatGPT/GPT-4 and how it could help patients understand their own conditions and ease administrative burdens. It’s a perfect appetizer for a Ctrl-Alt-Operate subscription 😉

Two papers published in the neurosurgical literature this week show that ChatGPT performed impressively well on the neurosurgical written boards and that GPT-4 improves on it significantly, getting 83% of questions correct - well enough to pass. For context, the mean score on this neurosurgery-specific Q-bank is 69%.

One of the more underrated pieces of news this week was the release of HuggingGPT, a system that uses an LLM (e.g., ChatGPT) as a controller to execute different A.I. tasks across different functions. This is an extraordinarily powerful concept. It allows intelligent language models to take in a goal, select the appropriate models from a menu of options, and interpret the results for you. Study this image; we think it’ll come back again in the future…
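For the programmers in the audience, here is a minimal sketch of that controller loop. It is not HuggingGPT's code: the three "models" are stub functions we made up, and a keyword lookup stands in for the LLM that would normally do the planning. All names (`MODEL_MENU`, `select_task`, `run_controller`) are hypothetical.

```python
from typing import Callable, Dict, Tuple

# Hypothetical "menu" of specialist models. Each one is a stub function
# standing in for a real model that the controller could call.
def caption_image(payload: str) -> str:
    return f"caption for {payload}"

def transcribe_audio(payload: str) -> str:
    return f"transcript of {payload}"

def summarize_text(payload: str) -> str:
    return f"summary of {payload}"

MODEL_MENU: Dict[str, Callable[[str], str]] = {
    "image-captioning": caption_image,
    "speech-recognition": transcribe_audio,
    "summarization": summarize_text,
}

# Assumption: keyword rules replace the LLM planner in this toy version.
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "image-captioning": ("image", "photo", "picture"),
    "speech-recognition": ("audio", "recording", "dictation"),
    "summarization": ("summarize", "summary"),
}

def select_task(goal: str) -> str:
    """Pick the first task on the menu whose keywords appear in the goal."""
    goal_lower = goal.lower()
    for task, words in KEYWORDS.items():
        if any(word in goal_lower for word in words):
            return task
    raise ValueError(f"no model on the menu matches: {goal!r}")

def run_controller(goal: str, payload: str) -> str:
    task = select_task(goal)            # 1. take in the goal, choose a model
    raw = MODEL_MENU[task](payload)     # 2. run the chosen model
    return f"[{task}] {raw}"            # 3. report the result back to the user
```

Calling `run_controller("Summarize the op note", "note.txt")` routes the request to the summarization stub. In a real system, the interesting part is that step 1 and step 3 are done by a language model rather than by hand-written rules.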

🤿🤖 More Than Meets The Eye: Vision Transformers for Surgical Performance Assessment

As astute substack readers may know, both Dhiraj (undergrad, med school) and Dan (neurosurgery training) spent formative years at the University of Southern California. Over the past decade, we placed the first digital planks in the foundation of a surgeon-scientist-industry partnership that is now driving the digital transformation of surgery.

This week, we dive into the research resulting from the leadership and vision of Dr. Andrew Hung, Dr. Gabi Zada, and Prof. Anima Anandkumar, the innovation and skill of scientist Dani Kiyasseh, and the hard work of dozens of teammates in Los Angeles and across the world. We would need a book to do justice to this overnight success more than ten years in the making, but we’ve only got your attention for another 83 seconds, so let’s get into it.

We should develop technology to make surgery safer for all people worldwide.

Lack of feedback critically limits surgical improvement. After the completion of formal training and certification, surgeons receive approximately zero performance feedback during the remainder of their careers. Despite living in data poverty, surgeons work diligently to improve their skills through decades of practice, relying upon their memory, experience, and intuition. Without quantitative data and feedback, the individual ability of surgeons to improve is constrained, delayed, and inequitably distributed.

Improving surgical skill is a life-or-death imperative for each of the 300 million patients undergoing surgery each year. Improving surgery is a global health priority: perioperative death is the third-leading global cause of death according to the WHO.

A 1000x expansion of global surgical care is a human rights imperative: the 1000x disparity in surgery-per-capita between the most limited (Ethiopia) and most available (Colombia) nations results in over 143 million needed surgeries that are not performed, costing millions of lives and trillions in lost GDP over the next decade.

In order to safely and effectively 1000x global surgery, we require the ability to systematically measure, understand, and improve the basic constituent steps and actions of surgery at scale.

We can fundamentally transform human health by addressing this deep human inequity and trillion-dollar market opportunity using existing technology.

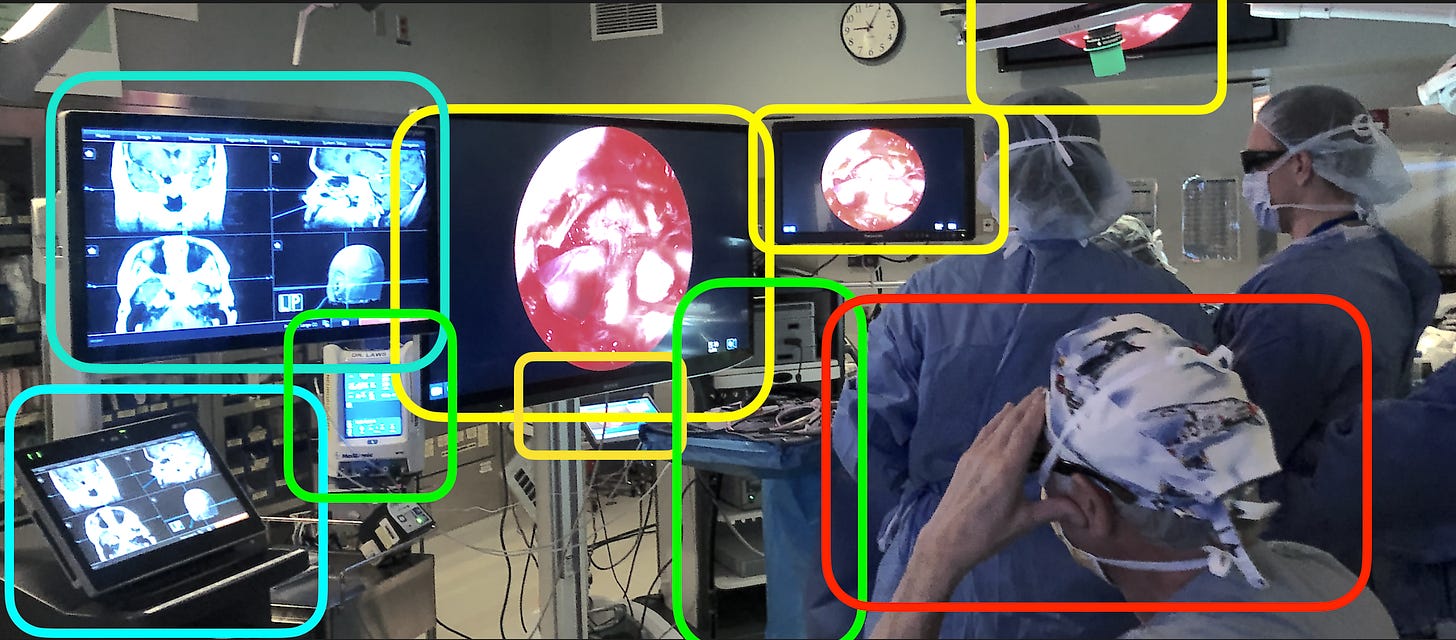

Look at all of these potential data sources. Today, their data fade into the ether.

In past years, we published public surgical video data to train machine learning systems and developed a system that beat human experts in predicting when surgeons would be unable to control life-threatening bleeding during brain tumor surgery.

We have a simple message:

Video of surgery contains life-saving data.

Please stop throwing video away or locking it in a desk drawer.

Here’s what our team can do with surgical video data:

This week, Dr. Hung, Prof. Anandkumar, lead scientist Dani Kiyasseh, and team published results of their deep learning, computer vision-based model that can watch and evaluate the most minute actions of surgery, called gestures, using video data from a global network of hospitals and across several procedures. By studying Dr. Hung’s surgical videos of surgeons performing urologic surgery, Kiyasseh and team developed a Surgical Artificial Intelligence System (SAIS) that can watch new videos it has never seen before, detect steps and gestures, and assess surgeon skill.

Across three papers in Nature-Portfolio journals, Kiyasseh et al. describe our arc of research:

Developed a unified surgical artificial intelligence system capable of reliably detecting surgical steps and gestures, evaluating how skilled the surgeon was at suturing, and generalizing across unseen procedures and hospitals. This unified system can be used to create quantitative representations of what happens during surgery and qualitative assessments of how well it was done, and it can even explain why it assigned a grade (down to the video frame and movement type). This kind of system can address the lack of feedback and assessment in surgery. (Nature Biomedical Engineering)

Improved the surgeon feedback provided by the AI by making it more fair. The AI’s skill explanations were not consistent across all institutions, patient characteristics, and surgeon types. We developed a training methodology (TWIX, short for training with explanations) that explicitly taught the AI which frames were most important, using the explanations given by human experts. TWIX keeps the model focused on the parts of surgery that matter and doesn’t allow it to pick up on extraneous or confounding visual data. TWIX improved model performance and reduced bias compared to a standard attention (vision transformer) architecture. Knowing that the AI’s judgements are more closely aligned with how humans would have viewed a surgical scene improves the trust humans have in those judgements. (Communications Medicine)

We took a closer look at bias and at our proposed solution, TWIX, by deploying SAIS to other hospitals, both to detect bias and to evaluate our mitigation strategy. We also evaluated whether TWIX actually made the model’s predictions better, finding that in some cases TWIX led to marked improvement, while in others the improvement was small or performance was slightly worse. Our work highlights the importance of looking for bias at the patient, surgeon, hospital, and procedure level and of developing mitigation strategies. (npj Digital Medicine)
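To make the "training with explanations" idea concrete, here is a rough sketch of what an explanation-supervised loss could look like. This is our own simplified illustration, not the loss used in the papers: we assume the model produces one attention score per video frame plus a probability of high skill, and that human experts supply a 0/1 mask marking the frames they considered important. The function names and the `weight` parameter are hypothetical.

```python
import math
from typing import List

def softmax(scores: List[float]) -> List[float]:
    """Turn raw per-frame scores into attention weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def skill_loss(prob_high_skill: float, label: int) -> float:
    """Binary cross-entropy on the skill prediction (label is 0 or 1)."""
    eps = 1e-12
    p = min(max(prob_high_skill, eps), 1 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

def explanation_loss(attention: List[float], human_mask: List[int]) -> float:
    """Cross-entropy between the normalized human frame mask and attention.

    The loss is small when attention mass sits on frames the experts marked.
    """
    eps = 1e-12
    marked = sum(human_mask)
    if marked == 0:  # no expert annotation for this clip: nothing to supervise
        return 0.0
    target = [m / marked for m in human_mask]
    return -sum(t * math.log(a + eps) for t, a in zip(target, attention))

def combined_loss(frame_scores: List[float], prob_high_skill: float,
                  label: int, human_mask: List[int],
                  weight: float = 0.5) -> float:
    """Skill loss plus a weighted penalty for ignoring expert-marked frames."""
    attention = softmax(frame_scores)
    return (skill_loss(prob_high_skill, label)
            + weight * explanation_loss(attention, human_mask))
```

With a mask of `[0, 1, 1, 0]`, frame scores that peak on the two middle frames give a lower combined loss than scores that peak on the outer frames, even when the skill prediction is identical. That extra term is what nudges the model to look where the experts looked.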

I encourage the readership to visit https://danikiyasseh.github.io/blogs/index.html, where lead author Dani Kiyasseh and team maintain general-audience focused narratives of this work.
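Looking for bias "at the patient, surgeon, hospital and procedure level" boils down to computing the same performance metric separately for each subgroup and comparing the results. The sketch below uses plain accuracy as a simple stand-in; the metric, the record format, and the function names are our assumptions for illustration, not the evaluation protocol from the papers.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical record format: (subgroup name, true label, predicted label).
Record = Tuple[str, int, int]

def accuracy_by_group(records: List[Record]) -> Dict[str, float]:
    """Compute prediction accuracy separately for each subgroup."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}

def worst_case_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best-served and worst-served subgroup."""
    return max(per_group.values()) - min(per_group.values())
```

You would run this once grouped by hospital, once by surgeon, and so on, then repeat after applying a mitigation such as TWIX to see whether the gap shrinks. A model can look excellent on average and still have a large gap hiding underneath.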

What this means for surgeons, students and scientists:

We have the tools we need to build the “surgical report card” where you can track your progress and see your improvement over time. We can overcome the feedback gap. We can deploy these technologies at a distance, with fairly low cost, to any surgeon across the world.

There are real challenges in this work, primarily geared around the requirement for large datasets and high quality annotated surgical video. We are developing methods to reduce our reliance on experts, but keeping AI aligned with humans requires a significant amount of interaction and assessment.

We still don’t know the best ways to present this data to surgeons and represent the surgical episode. Will we need a video reconstruction à la Hawk-Eye in MLB? Or is a text narrative more efficient? Probably a mix of both…

Lastly, we are actively looking for collaborators in this work. Surgeons can join this effort from any surgical discipline. We welcome all collaborators and contributors. The only thing you need to bring is your available and accessible data.

Sneak Preview of Part 2:

In Part 1, we covered “why” we do this work and “what” it is that we do. Next week we will delve into the “how”, introducing a higher-level understanding of vision transformers so that you can have a general idea of when computer vision models might succeed, when they might struggle, and what you would need to get involved in this field. Until then …

🐦🏆 Tweets of the Week:

Physicians…if you don’t know how to program and don’t think you can - here’s some inspiration:

And in normal news…

See you all next week.

Feeling inspired? Drop us a line and let us know what you liked.

Like all surgeons, we are always looking to get better. Send us your M&M style roastings or favorable Press Ganey ratings by email at ctrl.alt.operate@gmail.com