What's New in Surgical AI: 4/16/2023 Edition

Vol. 21: Regulators and the Development of Autonomous Medical and Surgical AI

Welcome back! Here at Ctrl-Alt-Operate, we sift through the world of A.I. to retrieve high-impact news from this week that will change your clinic and operating room tomorrow.

The discussion about artificial general intelligence (AGI) and autonomous AI agents has reached a fever pitch, and this week we will dive into the medical and surgical implications of AGI and autonomy. Unsurprisingly, calls for regulation have also heightened. We’ll tell you what to watch out for, and why some of the calls might be more self-serving than they seem. We’ll also cover press releases from Google, Amazon, and others that have broad application to the medical domain. Lastly, our tweets of the week describe a groundbreaking study using human-AI teams to read ICU chest X-rays for acute respiratory distress syndrome (ARDS), a life-threatening condition that requires modification of mechanical ventilation strategies to improve survival. So, who should read the chest X-ray first, a human or an AI? Let’s get into it.

As always, we’ll do the heavy lifting, keeping track of the who’s who, what’s what, and bringing it back to the OR, clinic, hospital, or wherever else you find yourself delivering care.

Table of Contents

📰 News of the Week: Regulators, Mount Up!

🤿 Deep Dive: AutoGPT, What’s the Worst that Could Happen?

🐦🏆 Tweets of the Week

🔥📰 News of the Week: Regulators, Mount Up!

The Japanese government announced that it is considering integrating AI into government functions. The announcement comes on the heels of Italy’s “ban” on ChatGPT and growing calls to regulate AI in the United States, and the diversity of governmental responses to AI deserves close attention. Based on other heavily regulated industries such as medicine and finance, we can predict an initially incoherent mish-mash of attempted regulation, followed by an ultimate convergence of regulatory regimes necessitated by the increasingly interconnected nature of software and internet technologies. Ezra Klein argues that the industry itself is clamoring for regulation:

Among the many unique experiences of reporting on A.I. is this: In a young industry flooded with hype and money, person after person tells me that they are desperate to be regulated, even if it slows them down. In fact, especially if it slows them down.

Government regulation can be profoundly anti-competitive, helping market leaders calcify their positions. Much more to come on this front.

AWS finally returned fire in the AI PR wars, dropping a new foundation-model-as-a-service tool, Bedrock. If you’ve got your data on AWS and a bunch of Lambda functions and integrations running, you can now plug in a foundation model with ease (as long as you don’t want to use GPT, Midjourney, LLaMA, or other leading models). It remains to be seen whether Bedrock can compete with Microsoft’s Azure marketplace.

Google put out another exciting foundation model press release, this one reporting further gains in generative text performance on clinical queries in the medical domain. As with all Google products, it remains unclear from the scant press release how this will be productized, validated, and ultimately deployed, but the hype wheels are turning again.

AI Breakfast interviews Zak Kohane:

It's also clear that students are using these tools whether we guide them or not and furthermore, many of our patients are doing the same. Therefore, embracing and understanding this new player in the patient, doctor relationship seems important and worthwhile.

Caption Anything: a logical extension of Meta’s Segment Anything Model + ChatGPT to create image labels, brought to you by the research labs of Chinese mega-media conglomerate Tencent. You can try it out in an HF space here. The implications of these technologies for visual question answering and model-assisted labeling are only beginning to emerge, and they are starting a new wave of tools that unlock visual data for quantitative analysis.

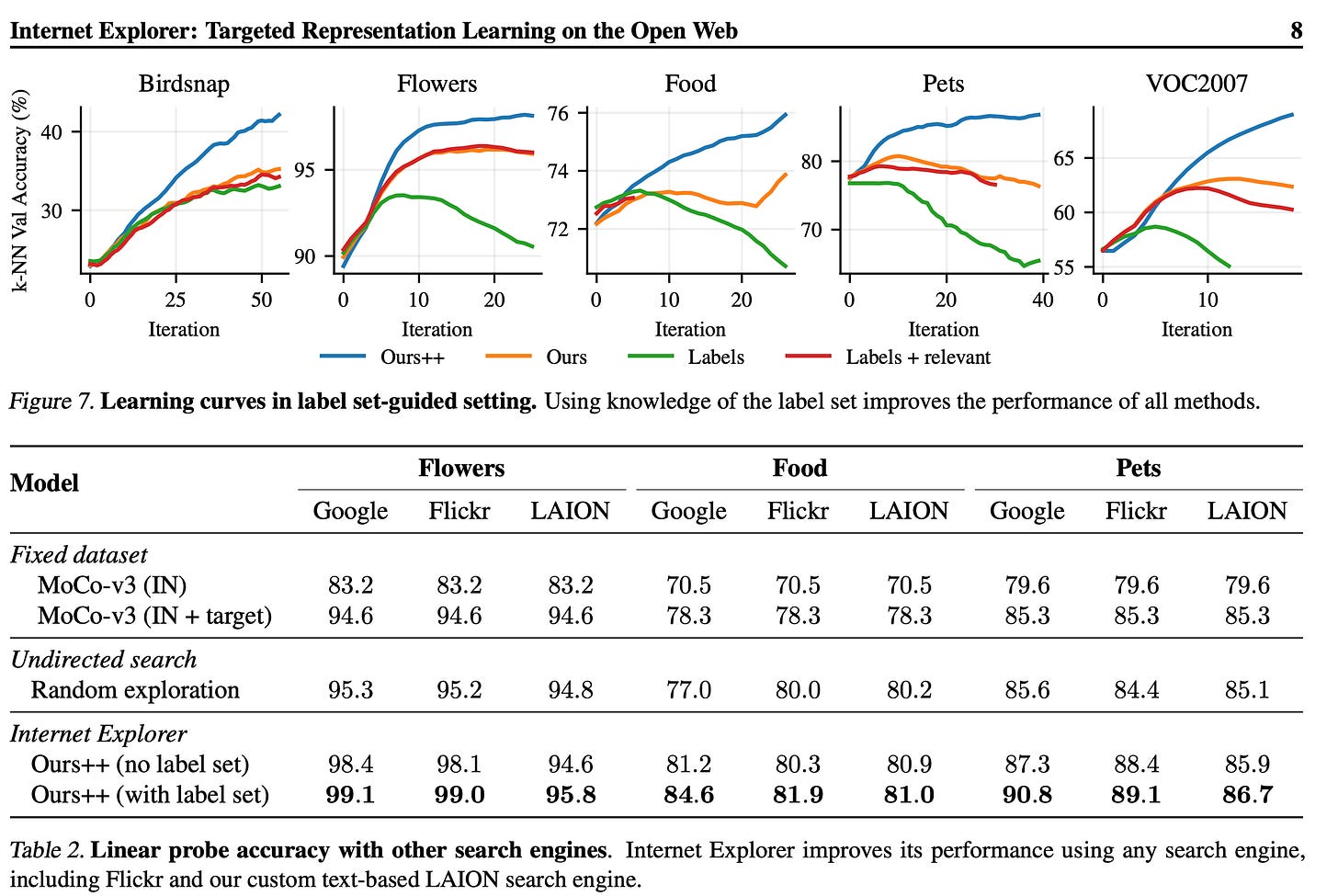

Oh, but you don’t have enough visual data, you say? Not to worry, here comes Internet Explorer: a self-supervised web-connected agent for training data acquisition. You provide a (small, inadequate) model training dataset and the agents scour the web for images that progressively improve the performance of your classification model. For example, say you want to train a model to classify folks who are wearing masks properly (PPE detection was a hot topic for obvious reasons). You provide the model with some images of mask wearers, with labels, and see that your model is performing poorly. Voila:

How does this technology work, you ask? Sounds like it’s time for a primer on autonomous agents.

🤿 🤖 The Next, Next New Thing: Autonomous Agents

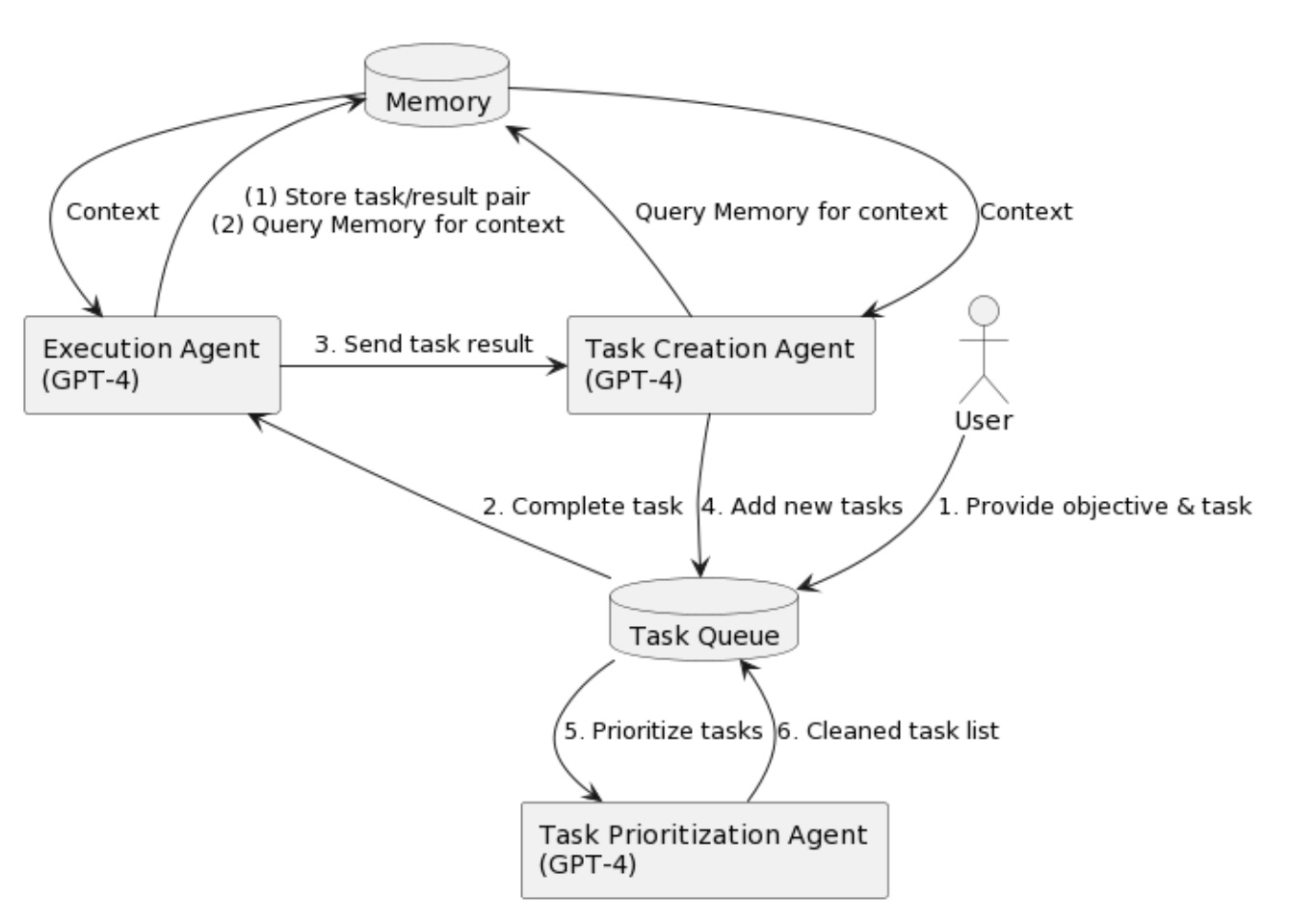

This week's deep dive is on a topic that has exploded in popularity in the past two weeks: autonomous A.I. agents. Unlike traditional ChatGPT-style models, autonomous A.I. agents work without human intervention at every step. These agents can perform tasks, generate new tasks based on completed results, and prioritize tasks in real time. Imagine an early version of Jarvis from the Iron Man series. This week’s crop of autonomous agents uses the popular OpenAI GPT-4 models to access internal systems, the internet, and other GPT-4 models. These "agents" can iteratively communicate with each other, creating, completing, and reprioritizing tasks until the ultimate job is done.

The idea is: if you want a coffee ordered, the autonomous A.I. agent would be able to create a task list that includes: 1) knowing your current location, 2) knowing the nearest coffee shops with delivery, 3) accessing the coffee shop’s online ordering system, and 4) placing the order for pickup or delivery.

This is a simple example, but the approach can scale to complex jobs that require creating a task list and iterating on that list depending on the results: market research analysis, event planning …
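To make the loop concrete, here is a minimal Python sketch of the create, execute, and reprioritize cycle described above, using the coffee example. It is not BabyAGI's actual code. The functions `plan`, `execute`, and `run_agent` are hypothetical names, and the first two are hard-coded stand-ins for what would really be language-model calls, so the example runs offline.

```python
from collections import deque

# Hypothetical stand-in for a language-model call that breaks a goal into tasks.
def plan(goal):
    if goal == "order a coffee":
        return [
            "find current location",
            "find nearby coffee shops with delivery",
            "open the shop's online ordering system",
            "place the order",
        ]
    return [goal]

# Hypothetical stand-in for executing one task.
# Returns (result, follow-up tasks discovered while doing the work).
def execute(task):
    if task == "find nearby coffee shops with delivery":
        # A result can spawn new work the original plan did not include.
        return "3 shops found", ["pick the shop with the shortest delivery time"]
    return "done", []

def run_agent(goal, max_steps=20):
    queue = deque(plan(goal))
    log = []
    # max_steps is a human-set cap so the loop cannot run forever.
    while queue and len(log) < max_steps:
        task = queue.popleft()
        result, new_tasks = execute(task)
        log.append((task, result))
        # Reprioritize: follow-up tasks jump to the front of the queue.
        queue.extendleft(reversed(new_tasks))
    return log

if __name__ == "__main__":
    for task, result in run_agent("order a coffee"):
        print(f"{task}: {result}")
```

In this sketch the agent runs five tasks rather than the four it planned, because the shop search adds a "pick a shop" step mid-run. Real agents differ mainly in that an LLM does the planning and decides the new priorities.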

The kickstarter for the autonomous agent trend was a paper/model release called BabyAGI (or Baby-Artificial General Intelligence), which has been the top trending repository on GitHub for the past two weeks. A team out of Google/Stanford also released this paper (image above), where they created a Pokemon-like game simulation and had multiple AI models communicate with each other within the game. The end result: the AIs independently planned a birthday party. Even crazier, some of the agents said they couldn’t attend! Since then, there have been dozens of product demos showing these AI agents running on your desktop or in your browser, writing and executing code, etc. Needless to say, this is just the beginning.

The original tweet can be found here.

Somehow, we think these agents will make their way into the healthcare space sooner than expected (our take is +/- 1 month). Accordingly, we wanted to bring them to you, clinician/surgeon/healthcare enthusiast, so you can be prepared for when they inevitably do make a splash.

In the healthcare space, autonomous A.I. agents have the potential to bring transformative changes, and ultimately reduce the administrative burden placed on the system and on clinicians.

Imagine an autonomous AI agent as your “sidekick” that anticipates your day and identifies problems before they reach patient care.

For surgeons, AI agents could streamline preoperative planning. If the agent knows the types of cases a surgeon does and what is contained in each tray, it can communicate with an AI model specializing in operating room scheduling to ensure that all necessary surgical equipment, and the preferred circulators and scrub techs, are available for a given case. The AI agent could then speak with another AI model responsible for handling prior authorizations, negotiating with insurance companies, and scheduling patients. The important principle is that these are all directed by the human at the top level, but the underlying “tasks” are carried out somewhat autonomously.

For clinics, autonomous A.I. agents could help manage in-basket messages and other administrative tasks, allowing clinicians to focus on patient care. An AI agent could communicate with different AI models to sort, categorize, and prioritize incoming messages, and even draft responses or delegate tasks to other staff members. This coordinated approach would help clinicians manage their workload effectively and improve overall productivity.

Improving patient experience is another area where autonomous A.I. agents could make a difference. AI agents could communicate with different AI models to coordinate appointments and provide real-time updates on test results and treatment options. We all want to answer every question our patients have; AI agents fine-tuned to your clinic workflows and protocols could give your patients access to a triaged version of you, without burdening you or your staff. By streamlining communication across various models, patients would receive a seamless experience that reduces stress and enhances their overall satisfaction. It could also ease the administrative burden that is forcing more and more clinics to shut down or sell to private equity.

We can use autonomous agents to test novel methods of health-care delivery. The agents themselves can create new patterns and systems.

For example, you could set a policy for these agents that tasks them with minimizing clinic wait times or maximizing imaging accuracy, and place them in a simulated model of your hospital environment. Want to see what happens if you move your clinic from the 4th to the 1st floor, or add an extra scrub tech to the OR rotation? The autonomous AIs would then interact as simulated patients, doctors, and nurses to evaluate your change. Some of the AI agents might even be permitted to discover and implement their own optimizations in the “digital twin” simulated environment. Maybe they would be able to come up with useful solutions, just as AI has often (but not always) beaten humans at Go, StarCraft, and countless other games.
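A toy version of the "what if" question can be run without any AI at all. The Python sketch below is a plain first-come, first-served queue simulation, not an autonomous agent, and every number in it is made up for illustration. The function name `average_wait` and the schedule are assumptions of ours. It compares average patient wait with one versus two clinicians, which is the kind of metric an agent in a digital twin would be asked to minimize.

```python
import heapq

def average_wait(arrivals, visit_minutes, n_clinicians):
    """Average minutes patients wait before being seen, first come first served."""
    # Each heap entry is the time at which one clinician next becomes free.
    free_at = [0] * n_clinicians
    heapq.heapify(free_at)
    total_wait = 0
    for arrival in sorted(arrivals):
        next_free = heapq.heappop(free_at)   # earliest available clinician
        start = max(arrival, next_free)      # visit starts when both are ready
        total_wait += start - arrival
        heapq.heappush(free_at, start + visit_minutes)
    return total_wait / len(arrivals)

# Made-up schedule: one patient every 10 minutes, each visit takes 15 minutes.
arrivals = [0, 10, 20, 30, 40, 50]

if __name__ == "__main__":
    for n in (1, 2):
        print(n, "clinician(s):", average_wait(arrivals, 15, n), "min average wait")
```

With this invented schedule, a single clinician falls further behind with each patient, while a second clinician eliminates the wait entirely. An agent-driven digital twin would replace the fixed arrival and visit times with simulated patients and staff that behave less predictably.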

We firmly believe clinicians need to lead the deployment of these AI innovations. AI agents can create new challenges in data privacy, model accuracy, and ethical considerations. The less oversight, the more dangerous these models become. And of course, the question of clinical accuracy always (rightfully) comes into play. At what point does any of this require FDA oversight?

These models aren’t close to deployment yet. But if the past few months have taught us anything, it’s that the gap between “not there yet” and “there” can close at lightning speed. It’s our job at Ctrl-Alt-Operate to keep you well informed, so when a healthcare AI agent shows up in your inbox, you’re primed for what it might (and might not) be able to do.

🐦🏆 Tweets of the Week:

In a clear winner of our coveted golden tweet award for this week, Farzaneh et al. describe their method for teaming AI with human reviewers to read chest X-rays for ARDS. Their best paradigm was to send the XR to the AI first and then, if the AI had only intermediate confidence in its classification of ARDS or not ARDS, have a physician over-read the study. Similarly, for images where physicians were not confident, the AI outperformed humans. What we really need, of course, is a foundation model for chest XR that is capable of multi-domain performance rather than a single model trained on a single disease state at a single institution. Stay tuned… https://twitter.com/npjDigitalMed/status/1646546253650558976?s=2
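The AI-first paradigm amounts to a confidence-based deferral rule, which can be sketched in a few lines of Python. This is our illustration, not the authors' code. The function name `route_chest_xray`, the `physician_read` callback, and the 0.2 and 0.8 cutoffs are all hypothetical and are not values from the study.

```python
def route_chest_xray(ai_probability, physician_read, low=0.2, high=0.8):
    """Return (label, reader) for one study.

    ai_probability: the model's estimated probability of ARDS, between 0 and 1.
    physician_read: callable returning True if the physician reads ARDS.
    low, high: hypothetical cutoffs bounding the "intermediate confidence" zone.
    """
    if ai_probability >= high:
        return "ARDS", "ai"
    if ai_probability <= low:
        return "not ARDS", "ai"
    # Intermediate confidence: defer to a physician over-read.
    label = "ARDS" if physician_read() else "not ARDS"
    return label, "physician"

if __name__ == "__main__":
    # The physician callback is only invoked for the uncertain middle case.
    print(route_chest_xray(0.95, lambda: False))
    print(route_chest_xray(0.50, lambda: True))
```

The design point is that the physician's time is spent only on the studies where the model is unsure, so where the cutoffs sit determines how much of the reading workload is actually offloaded.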

https://twitter.com/sheng_zh/status/1645459835071385605?s=20

Feeling inspired? Drop us a line and let us know what you liked.

Like all surgeons, we are always looking to get better. Send us your M&M-style roastings or favorable Press Ganey ratings by email at ctrl.alt.operate@gmail.com