What's New in Surgical AI: 02/05/23

Vol 11. Our Last chatGPT Features for MDs, Best of Twitter Awards and more

The ChatGPT Deluge is Upon Us …

Every week I promise myself this will be the *last* week we cover chatGPT, and every week the thirst remains unquenched.

Here’s what has gone on since last time. Legacy media increasingly covers the ins and outs of chatGPT, debating the ethics of its maker hiring humans to create data to train models that may replace said humans. Microsoft Teams now includes GPT integration (I guess that’s what $10B gets you), and an integration into Bing is coming soon. Bing is relevant again!

The other internet hegemonies are preparing their responses (GOOG’s release is coming this week, BIDU isn’t far behind, and LAION is coming).

In our mildly contrarian fashion, we will be de-emphasizing chatGPT in our coverage in the coming weeks (A.I. is much more than language models), so this issue is our last push on how we think chatGPT will affect your lives over the next 3 months.

Image c/o USCG. Here’s a real hero at work.

Here at Ctrl-Alt-Operate, we cut through the noise and amplify the signal for clinicians, scientists and engineers working at the intersection of surgery, medicine and AI.

For the people, by the people! What do you think about our intention to put chatGPT in the rearview?

Before we take our final, deepest trip down the rabbit hole yet, here’s the rundown from our prior columns.

In previous weeks, we:

Covered the origin story of chatGPT (read the full Fortune x SamA interview here)

Delivered you a free preview of our open-beta GPT-enabled medical transcription and document generation app

Outlined our predictions for how the GPT-quake will reverberate throughout medicine in 2023

Took a deep dive into medical and research uses (and must-not-uses) of chatGPT

Served up mildly contrarian takes on whether chatGPT can *really* pass medical licensing exams (it can’t, and that’s the wrong question anyhow).

Ready for more? Let’s Go!

The “drawback” is the moment when the chatGPT tsunami sucks all of the water out to sea, exposing a beautiful view of the seabed. Running is counterproductive: just open a cold beverage and enjoy the ride. Copyright: 2004 AFP

Table of Contents

📺📓🩺Interaction with Medical YouTube Videos and Text

✉️🤖Automating Responses to In-Basket Messages

🏆 Tweets of the Week and Quick Hits

📺🩺Status of chatGPT interacting with Medical Videos and Text

This concept is so important that I’m happy for it to be the last component of our chatGPT story (ed: for now…). We briefly covered chatGPT’s interactions with medical video and text in two prior substacks (covering GPTPapers and a demo app by Jesse Zhang). To recap, here’s Jesse’s cute webapp, which allows natural language interaction with text for basic summarization and recall of information from that text.

Here it is summarizing what we (ctrl-alt-operate) said about chatGPT:

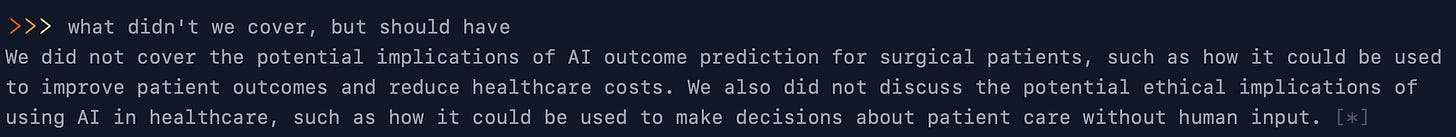

Pretty decent! Now what if we asked what we missed?

Not bad. Here’s another example: our test drive of filechat.io, which has a slick chatbot UI. I fed it a paper on cavernous sinus anatomy, and it did reasonably well at answering basic questions about material listed in the paper, but it started to slip, and when I asked it to define the boundaries of each compartment, it totally flubbed (not even worth reproducing), even though the answer is in the text. Medical content is really tricky: quality matters, and so does completeness. These are hard problems for any system designed for general language tasks.

These apps rely on a concept called embeddings (our explanation here and a deeper dive into strategies here), which are a way to quantify the relatedness of objects (in this case, words). Like anything, embeddings have limitations, which is why chatGPT bots in their current state just aren’t up to par with what the clinical community requires.
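To make “quantify relatedness” concrete, here is a minimal sketch of the retrieval step apps like these appear to perform: embed each chunk of the document, embed the question, and hand the model the chunk with the highest cosine similarity. We have not seen these apps’ code, so treat this as an assumption about the general pattern. The `embed` function is a hypothetical bag-of-words stand-in so the example runs offline; real apps call a learned embedding model, which captures meaning rather than just shared words.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical stand-in for a learned embedding model:
    # a bag-of-words count vector (lowercased, light punctuation stripped).
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    # Dot product over shared words, divided by the product of vector lengths.
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_chunk(question: str, chunks: list[str]) -> str:
    # Return the chunk whose embedding is most similar to the question's.
    q = embed(question)
    return max(chunks, key=lambda c: cosine_similarity(q, embed(c)))


chunks = [
    "The cavernous sinus contains the internal carotid artery and cranial nerve VI.",
    "Inbasket messages contribute to physician burnout.",
]
print(top_chunk("Which artery runs through the cavernous sinus?", chunks))
```

Note that the answer can only ever be as good as the retrieved chunk: if the relevant text is phrased differently, or the information lives in a figure, similarity search has nothing useful to return.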

Here’s why:

Embeddings function really well with highly similar text but often stumble when even slightly out of context.

Answers depend on the underlying text containing the correct information (which it may not).

Embeddings work best for dense text blocks, but medical knowledge is often conveyed through pictures/diagrams, images/scans, or graphs/recordings, where the application of embeddings is less clear.

How do we (you, future builder) handle this?

We need larger corpora of similar data. This is easy to mention and hard to implement in practice, and it probably requires moving to a vector database like Pinecone.

We could update (or retrain) the model for every instance, but this seems wildly impractical.

We could start stringing models together. This is where LangChain (see this repo for an example) may come in. LangChain also supports a diversity of models (though the alternatives are all worse than davinci-003 out of the box), the creation of agents, and other useful features.
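“Stringing models together” just means feeding one step’s output into the next. Here is a minimal, library-free sketch of the idea, assuming each step is a plain text-to-text function. This is not LangChain’s API; `pick_relevant_paragraph` and `build_prompt` are hypothetical placeholders for a retriever and a prompt template, and the final LLM call is left out.

```python
from typing import Callable

# Each step maps text to text. In practice a step might be an LLM call,
# a retriever, or a formatter; here they are hypothetical stand-ins.
Step = Callable[[str], str]


def chain(*steps: Step) -> Step:
    # Compose steps left to right: the output of one becomes the input of the next.
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def pick_relevant_paragraph(document: str) -> str:
    # Hypothetical retriever: keep only paragraphs that mention "boundaries".
    paragraphs = [p for p in document.split("\n\n") if "boundaries" in p.lower()]
    return "\n\n".join(paragraphs)


def build_prompt(context: str) -> str:
    # Hypothetical prompt template for a downstream answering model.
    return f"Using only this text, define each compartment's boundaries:\n{context}"


pipeline = chain(pick_relevant_paragraph, build_prompt)
doc = "Intro paragraph.\n\nCompartment boundaries are described in this paragraph."
print(pipeline(doc))
```

The appeal of chaining is that each step can be swapped or tested on its own (a better retriever, a different model) without retraining anything.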

These are some ideas. Overall, these models are clearly starting to answer questions as complicated as cavernous sinus anatomy. However, we are discovering that the delta between that functionality and true clinical utility is larger than we may have initially thought.

✉️🤖Automating Responses to In-Basket Messages

As we step back from chatGPT, we propose another solution that might take hundreds of person-hours to implement. Classic. But that’s where we are at the moment.

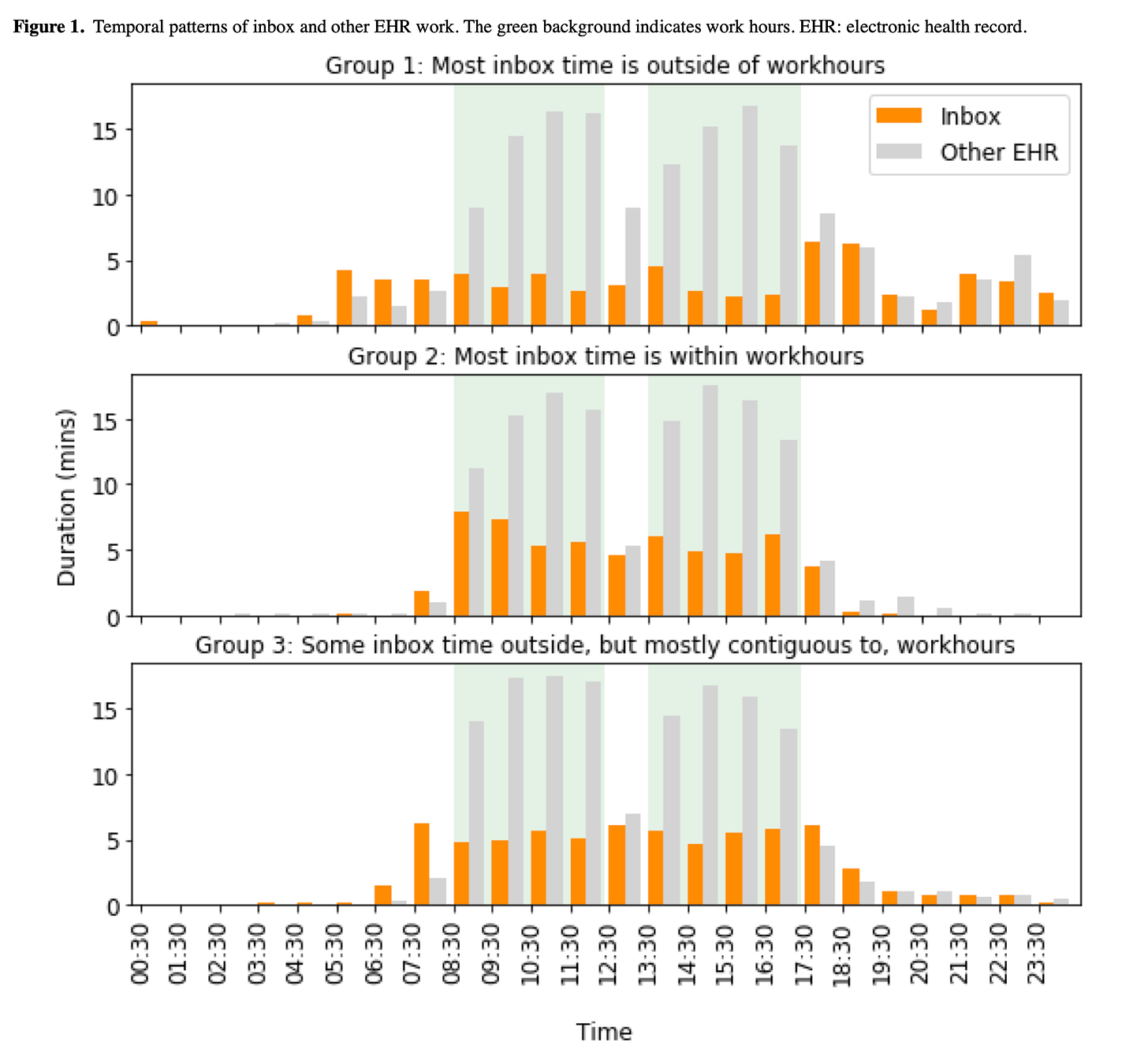

A recent paper by friend of the substack Dr. Arati Patel and her mentors Dr. Anthony DiGiorgio and Dr. Praveen Mummaneni found that even neurosurgery residents spend 9 of their 24 hours on call actively interacting with the EHR. One of the core drivers of physician burnout is the dreaded in-basket, a tool through which patients and other physicians can contact a physician at all hours of the day, with messages ranging from logistical (“reschedule please?”) to clinical (“I have chest pain, should I go to the ER or change my medication?”). Clinic physicians spent 3-4 hours in the EMR per workday, including more than an hour responding to in-basket messages. Burnout is worst when physicians have to manage inbox messages outside of work hours or spend an inordinate amount of time responding to automatically generated in-basket messages. A few health systems have made tackling this scourge a priority, but they are the exception; other institutions have turned to billing patients for these messages.

Could AI systems help here?

Let’s start outside medicine. Browser-based extensions like EmailTriager and ReplyGPT are laying waste to annoying emails all day, every day. You write a pithy reply (“yes”, “no”, “go away”) and GPT turns your snark into slightly more acceptable language. The magic is in the integration with the email client. True, anyone can open a chatGPT window and copy and paste back and forth, but for a simple email request that’s actually pretty annoying. Moreover, you’d have to give GPT all of the context your inbox already knows, which would take even longer. So, can these LLM-based apps handle that context?
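We don’t know how EmailTriager or ReplyGPT are built internally, but the core trick can plausibly be sketched as prompt assembly: the extension pulls the context the inbox already has and wraps it around your terse reply before sending it to GPT. `build_reply_prompt` and its template wording are hypothetical, and the actual model call is left out.

```python
def build_reply_prompt(incoming_email: str, terse_reply: str, sender_name: str) -> str:
    # Hypothetical template: the extension supplies the context the inbox
    # already knows, so the user only has to type the terse reply.
    return (
        "Rewrite my terse reply as a short, polite email.\n"
        f"Original message from {sender_name}:\n{incoming_email}\n"
        f"My reply: {terse_reply}\n"
        "Polite email:"
    )


prompt = build_reply_prompt("Can we meet Friday to review the budget?", "no", "Dr. Smith")
print(prompt)  # this string would then be sent to a GPT completion endpoint (not shown)
```

The value is less in the prompt itself than in not having to paste the original message and sender details by hand.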

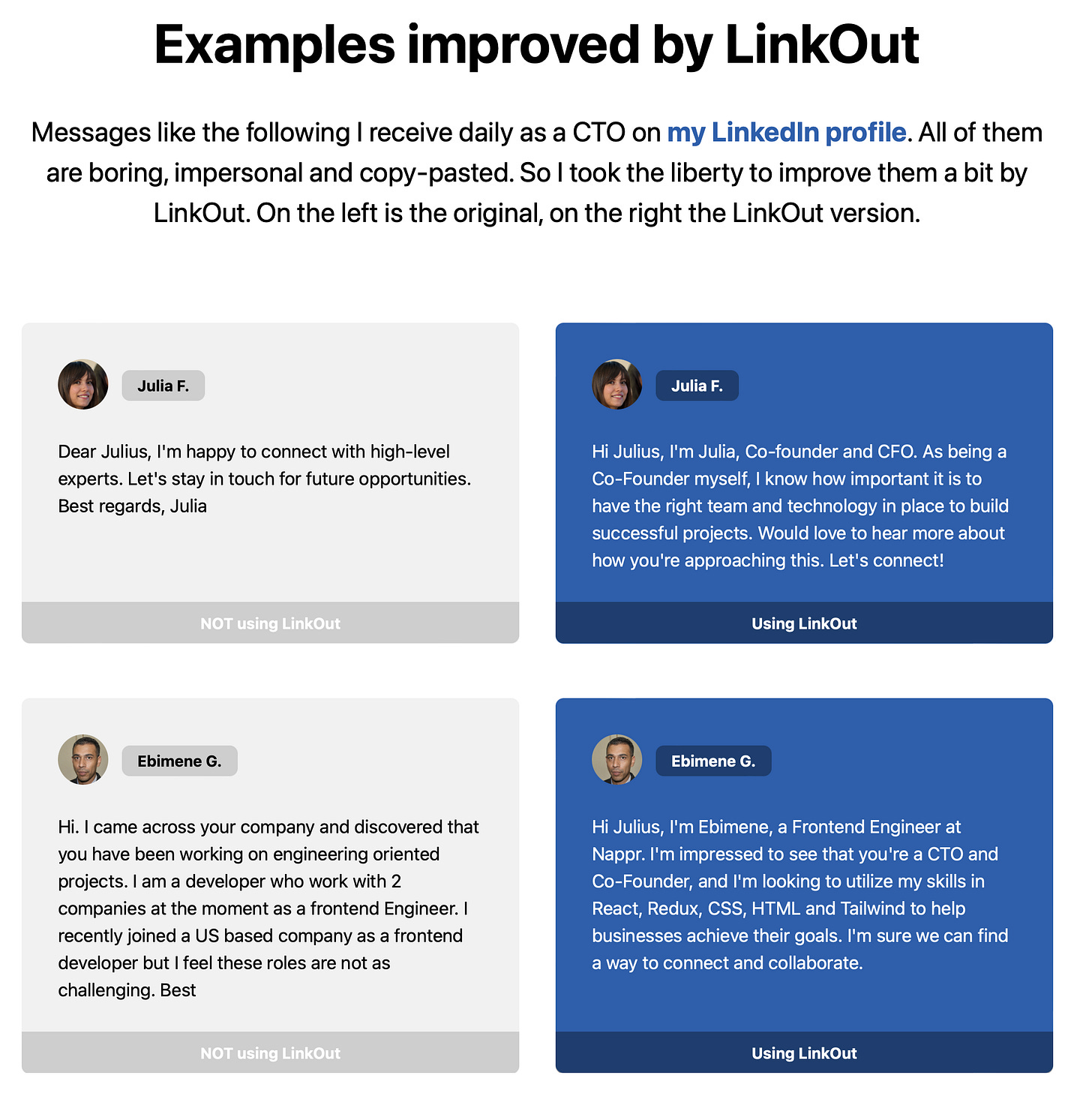

Check out this example from a webapp called “LinkOut”, which optimizes LinkedIn cold messages by drawing on shared context between sender and recipient to make them much more appealing. Deep EMR integration would let the same approach make intelligent text recommendations: patient sends “can I reschedule” » you review, and tell the A.I. “yes” » it sends back a custom message with a list of possible times based on your clinic schedule.
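The scheduling half of that dream is ordinary code; the LLM would mostly handle tone and wording. Here is a minimal sketch, assuming the clinic schedule is available as a list of booked (start, end) times. That data format, `open_slots`, and `draft_reschedule_reply` are all hypothetical; a real EMR would expose its own scheduling interface, and the draft would only be generated after the physician says “yes”.

```python
from datetime import datetime, timedelta


def open_slots(day_start: datetime, day_end: datetime,
               booked: list[tuple[datetime, datetime]],
               slot_minutes: int = 30) -> list[datetime]:
    # Walk the day in fixed-size slots and keep those that overlap no booking.
    slots = []
    step = timedelta(minutes=slot_minutes)
    t = day_start
    while t + step <= day_end:
        if all(t + step <= start or t >= end for start, end in booked):
            slots.append(t)
        t += step
    return slots


def draft_reschedule_reply(patient_name: str, slots: list[datetime],
                           max_options: int = 3) -> str:
    # Hypothetical template; an LLM could rephrase this before physician review.
    options = "\n".join(f"- {s:%A %b %d, %I:%M %p}" for s in slots[:max_options])
    return (f"Hi {patient_name}, happy to reschedule. Open times:\n{options}\n"
            "Reply with the one that works best.")


day = datetime(2023, 2, 6)
slots = open_slots(day.replace(hour=8), day.replace(hour=10),
                   booked=[(day.replace(hour=8, minute=30), day.replace(hour=9, minute=30))])
print(draft_reschedule_reply("Ms. Lee", slots))
```

Keeping slot-finding deterministic means the model never invents an appointment time; it can only phrase times the schedule actually offers.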

We can dream, right?

There’s a common theme here. The goal is NOT to have chatGPT actually send the replies; instead, GPT serves as a “data butler” whose job is to organize and report existing data scattered throughout a record. If the model crawled through the chart, dynamically extracted text, and presented it to the physician for review (citing its sources, something chatGPT doesn’t do very well), you might have a very plausible first draft of a reply to any inbox message you can dream of.

Then the physician reviews the draft, confirms or refutes GPT’s assertions, and checks it for accuracy. This is much faster, cognitively simpler, and more useful than writing inbox replies by hand.
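One way to picture the “data butler” workflow is as a review gate: every statement the model pulls from the chart carries its source, and only statements the physician confirms make it into the draft. The sketch below assumes that structure; `Assertion`, `draft_reply`, and the example statements and sources are made up for illustration, and the model’s extraction step is not shown.

```python
from dataclasses import dataclass


@dataclass
class Assertion:
    text: str                # a statement the model extracted from the chart
    source: str              # where it came from (hypothetical citation format)
    confirmed: bool = False  # set by the physician during review


def draft_reply(assertions: list[Assertion]) -> str:
    # Only physician-confirmed statements reach the draft, each with its citation.
    return "\n".join(f"{a.text} [{a.source}]" for a in assertions if a.confirmed)


# Made-up example data: one statement confirmed, one left unconfirmed.
items = [
    Assertion("Follow-up MRI is scheduled for 03/01", "Radiology order, 01/20", confirmed=True),
    Assertion("No medication changes since last visit", "Clinic note, 01/12"),
]
print(draft_reply(items))
```

Attaching the source to each statement is what makes the physician’s review fast: checking a citation is quicker than re-reading the chart.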

Versions of this auto-reply already live within Microsoft Outlook and will only get better with deeper OpenAI integration. I would expect to see auto-proposed inbox responses being demoed towards the end of 2023 or early 2024. We might even see some meaningful competition between EMR vendors :)

PS. Are there other uses for autocompletion in medical text? You betcha. What about dynamic checklists for users to review at the end of note writing (“Did you check for a systolic murmur?”), paired with automated note completion? Now, there’s a fine line between nagging nannies and workflow improvements, and I’m not entirely optimistic that the EMR gods will choose correctly, but it sure is fun to think about.
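The simplest, non-AI version of such a dynamic checklist is a rule table: if a note mentions a trigger but not the expected follow-up, surface a reminder. An LLM-based version would presumably replace the string matching with a model judging the note. The rules below are hypothetical examples of the mechanism, not clinical recommendations.

```python
# Hypothetical rules: (trigger term, expected follow-up term, reminder).
# Illustrative only; not clinical guidance.
CHECKLIST_RULES = [
    ("chest pain", "murmur", "Did you check for a systolic murmur?"),
    ("headache", "neuro exam", "Did you document a neuro exam?"),
]


def checklist_prompts(note: str) -> list[str]:
    # Case-insensitive substring matching against the finished note.
    text = note.lower()
    return [reminder for trigger, expected, reminder in CHECKLIST_RULES
            if trigger in text and expected not in text]


print(checklist_prompts("Chest pain and headache. Neuro exam normal."))
```

Rules like these are exactly where the “nagging nanny” risk lives: every extra rule is another pop-up, so which reminders are worth showing matters as much as the matching logic.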

🏆 Tweets of the Week and Quick Hits:

🏆Tweet of the Week: Ethan Mollick’s excellent substack covers the positive educational uses of AI chatbots in his negotiation seminar. For tasks where language-based rehearsal is useful, chatbots can provide many repetitions and variations, and careful prompt engineering helps students get the most from these encounters. A worthy read always, and again this week.

🙏Beg of the Week: Delphi allows you to chat with historical figures. Try out Steve Jobs for free, sign up and get ready to pay for the rest… We want Halsted, Cushing and Vivien Thomas…

Graph relationships (Vercel app here; note that you’ll need to trust it with your OpenAI API key, so we recommend reviewing the code and running it locally; repo here)

Running your own LLM to create embeddings on a MacBook Pro M2 Max in 3 minutes (code snippets here)

Clinical recommendations using goal prompting and GPT-2, applied to diabetes medications. Interesting.

Bearly is a document summarizer with a slick UI for the web (mobile/desktop) that even handles YouTube videos!

Finally, you may have heard about GPTZero, the (problematic) GPT detector for identifying students passing off chatGPT output as their own (which inadvertently flags many students who just have robotic-sounding writing). But have you heard of GPT-Minus1, which replaces a few words here and there to fool GPTZero? Let the arms race commence.

Feeling inspired? Drop us a line and let us know what you liked.

Like all surgeons, we are always looking to get better. Send us your M&M-style roastings or favorable Press Ganey ratings by email at ctrl.alt.operate@gmail.com

If you don’t use archive.today to find paywalled articles… consider yourself informed. You can thank us by sharing this substack with your nearest and dearest.